💥💥💞💞欢迎来到本博客❤️❤️💥💥

🏆博主优势:🌞🌞🌞博客内容尽量做到思维缜密,逻辑清晰,为了方便读者。

⛳️座右铭:行百里者,半于九十。

目录

💥1 概述

📚2 运行结果

🎉3 参考文献

🌈4 Matlab代码实现

💥1 概述

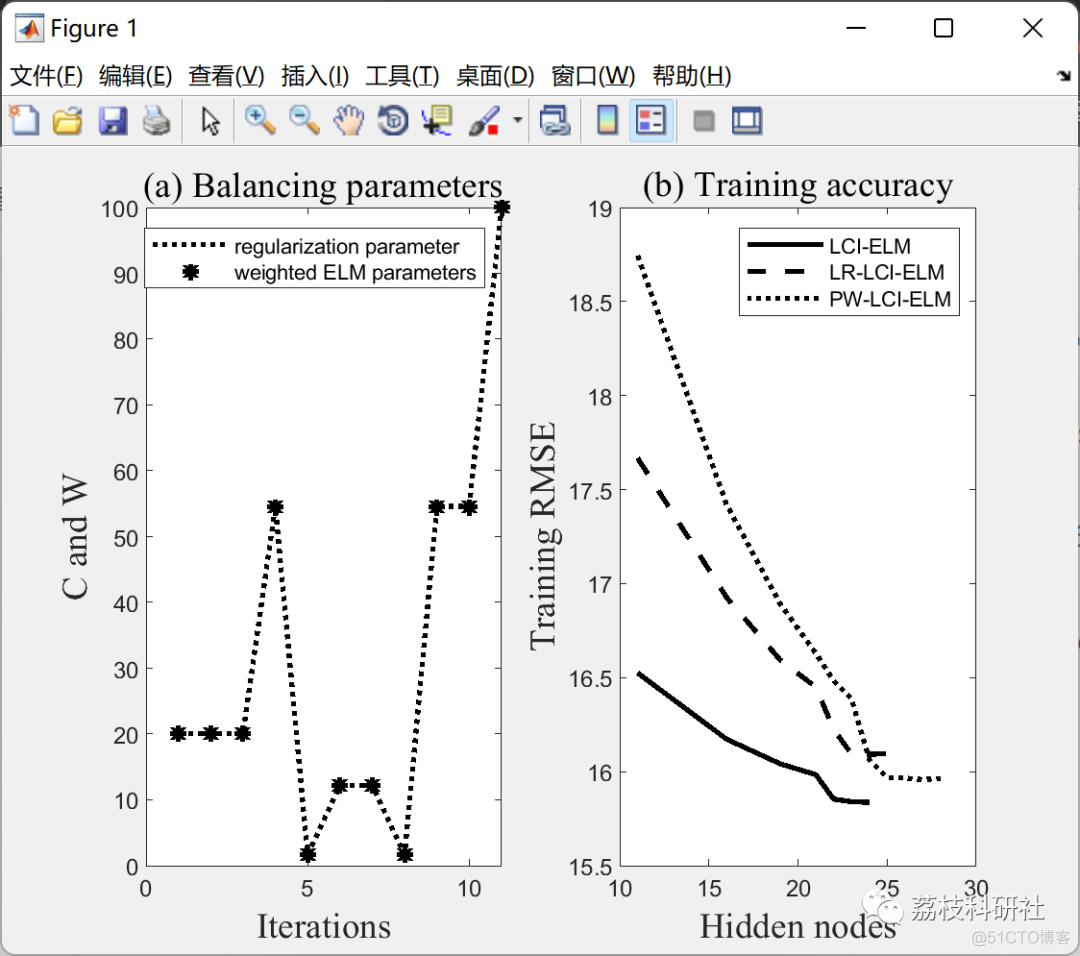

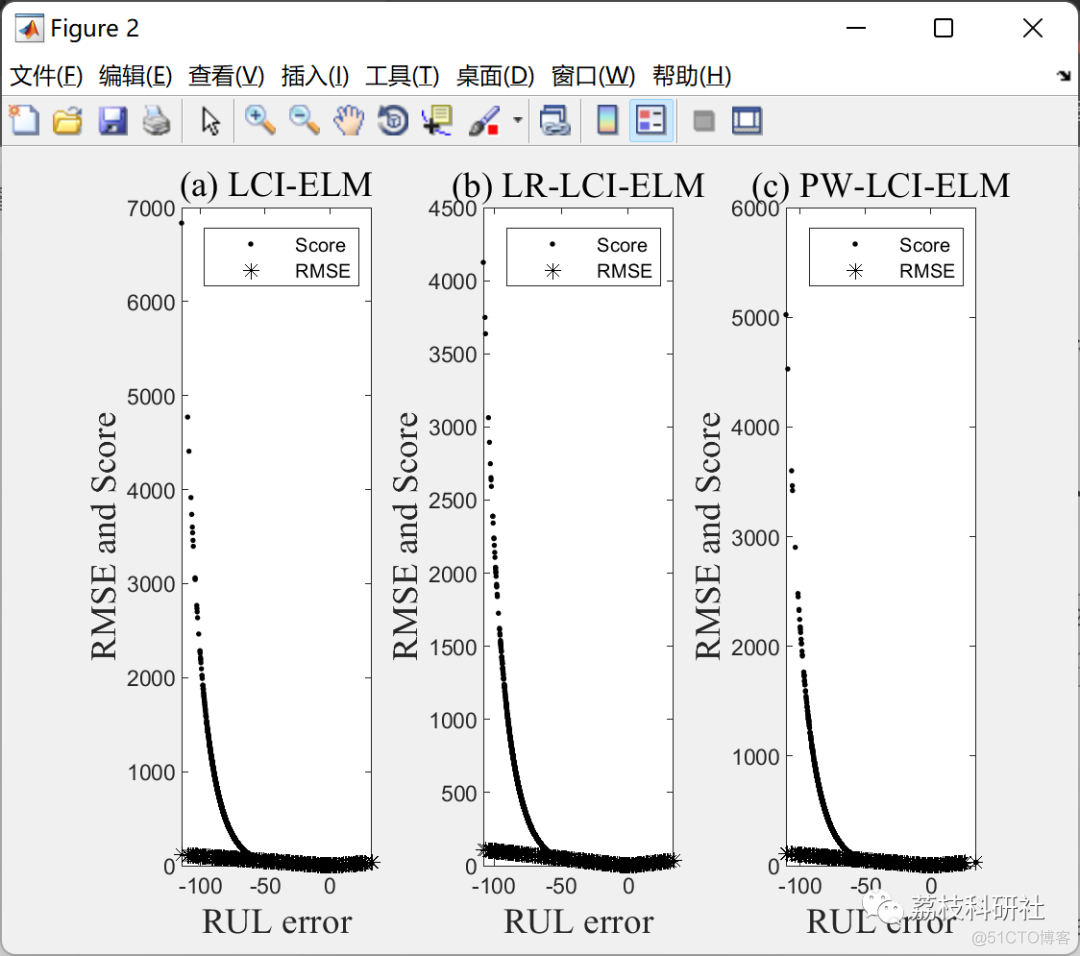

本文引入了 [1] 中提出的 LCI-ELM 的新改进。创新点侧重于训练模型对更高维度“时变”数据的适应。使用C-MAPSS数据集[2]对所提出的算法进行了研究。PSO[3]和R-ELM[4]训练规则被整合在一起,用于此任务。

[1] Y. X. Wu, D. Liu, and H. Jiang, “Length-Changeable Incremental Extreme Learning Machine,” J. Comput. Sci. Technol., vol. 32, no. 3, pp. 630–643, 2017.

[2] A. Saxena, M. Ieee, K. Goebel, D. Simon, and N. Eklund, “Damage Propagation Modeling for Aircraft Engine Prognostics,” Response, 2008.

[3] M. N. Alam, “Codes in MATLAB for Particle Swarm Optimization Codes in MATLAB for Particle Swarm Optimization,” no. March, 2016.

[4] J. Cao, K. Zhang, M. Luo, C. Yin, and X. Lai, “Extreme learning machine and adaptive sparse representation for image classification,” Neural Networks, vol. 81, no. 61773019, pp. 91–102, 2016.

📚2 运行结果

部分代码:

🎉3 参考文献

[1] Y. X. Wu, D. Liu, and H. Jiang, “Length-Changeable Incremental Extreme Learning Machine,” J. Comput. Sci. Technol., vol. 32, no. 3, pp. 630–643, 2017.

[2] A. Saxena, M. Ieee, K. Goebel, D. Simon, and N. Eklund, “Damage Propagation Modeling for Aircraft Engine Prognostics,” Response, 2008.

[3] M. N. Alam, “Codes in MATLAB for Particle Swarm Optimization Codes in MATLAB for Particle Swarm Optimization,” no. March, 2016.

[4] J. Cao, K. Zhang, M. Luo, C. Yin, and X. Lai, “Extreme learning machine and adaptive sparse representation for image classification,” Neural Networks, vol. 81, no. 61773019, pp. 91–102, 2016.