Hi!

I posted on a previous topic regarding this, but decided to open my own thread, since I got a lot of benchmark data and information that might be helpful.

I've had a four-drive RST RAID-5 array on an i9-9900K / ASUS Z390-F that performed OK for several years. Write performance with small files was not good, but for bigger files the performance was at least sufficient. I use the array for RAW photo storage: once the photos are written to disk, they are only read, and small .xmp files and preview files are written to the disk.

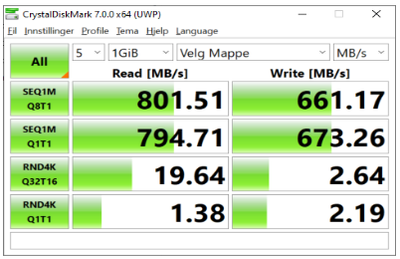

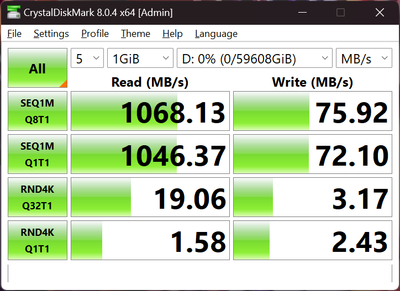

Here's a benchmark from 2019, when the array was created:

Real world performance, big file:

A few months ago one of the drives failed, and I rebuilt the array with a new drive.

I also upgraded the computer with the following specs:

ASUS Z790 ProArt Creator Wifi

ASUS 4070 Proart OC

Intel 14900k

Kingston 2 TB 7300 MB/sec nvme SSD, system (C:) drive

2 x 2TB intel nvme SSD for projects

Corsair 2x32 GB 6000 MHz C40-40-40-77

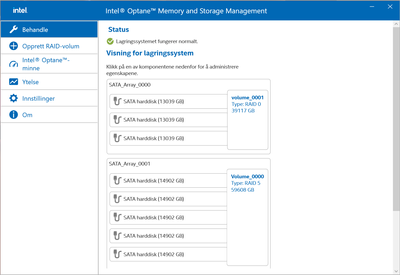

4 x 16 TB Seagate IronWolf Pro in RAID-5 for storage

3 x 14 TB Western Digital 14 TB helium drives in RAID-0 for temp files/rendering projects

Corsair RM750x PSU

QNAP 832X NAS with 7x10 TB Western Digital in RAID-5 connected with 10 Gb ethernet, for backup

I then expanded the RAID-5 array with one extra 16 TB drive, for a total of 5 x 16 TB. Expanding the array took 10 (TEN!) days...

Stripe size is 128 KB.
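For context on what "stripe size" means for RAID-5 writes, here is a minimal sketch. It assumes the 128 KB figure is the per-disk chunk (what Intel calls the strip size), so one full stripe row carries 128 KB x (drives - 1) of data plus one chunk of parity. Writes that cover whole rows let parity be computed from the new data alone; partial rows need a read-modify-write. The helper names are hypothetical, and nothing here claims this is the cause of the slowdown described in this thread.

```python
def full_stripe_kib(n_drives: int, chunk_kib: int = 128) -> int:
    """Data carried by one full RAID-5 stripe row, assuming `chunk_kib`
    is the per-disk chunk ("strip") size. One chunk per row is parity."""
    if n_drives < 3:
        raise ValueError("RAID-5 needs at least 3 drives")
    return chunk_kib * (n_drives - 1)


def is_full_stripe_aligned(offset_kib: int, length_kib: int,
                           n_drives: int, chunk_kib: int = 128) -> bool:
    """True if a write starts and ends on full-row boundaries, i.e. parity
    can be computed without first reading old data/parity from disk."""
    row = full_stripe_kib(n_drives, chunk_kib)
    return length_kib > 0 and offset_kib % row == 0 and length_kib % row == 0


for n in (4, 5):
    print(f"{n} drives: one full stripe row = {full_stripe_kib(n)} KiB of data")
```

With these assumptions, a 4-drive array has 384 KiB of data per row and a 5-drive array has 512 KiB, so an aligned 1 MiB write covers exactly two rows on 5 drives but not on 4.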

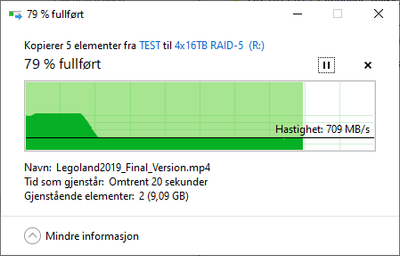

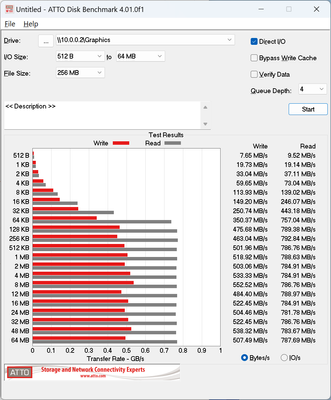

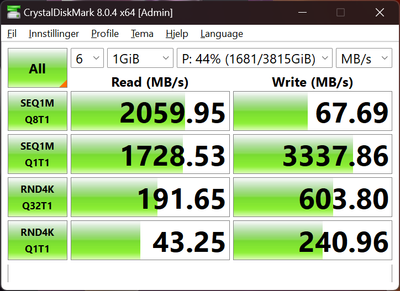

And the write speed is now just horrible:

The array is half full, so the read speeds are within expectations: I'm getting 1000 MB/sec at the start of the array. But the write speed is completely dead.

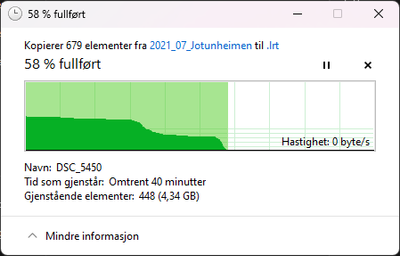

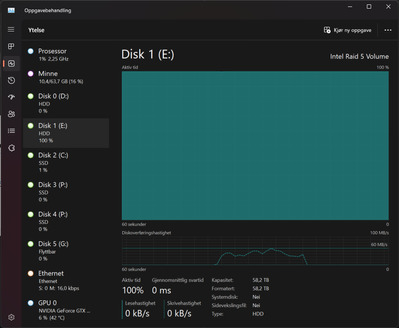

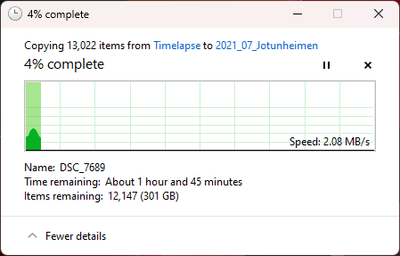

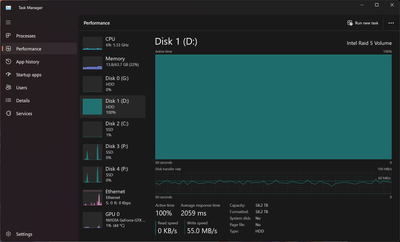

CrystalDiskMark says 68 MB/sec write, but in real-world use I'm getting 18-19 MB/sec write on BIG files. It also seems like the array sometimes hangs for a whole minute, with write speed at ZERO and disk utilization at 100%.

The drives are NAS/RAID-ready drives, rated at 260 MB/sec each.

As you can see, the array hangs for a minute, then transfers at 40-50 MB/sec for 15-20 seconds, then hangs for another minute. Response time varies between 2000 and 12000 ms, sometimes even more.
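To capture the stop-and-go pattern outside a benchmark tool, one option is a small script that writes a large file in chunks, forces each chunk out with `fsync`, and logs per-chunk speed and long stalls. This is a hypothetical sketch (the function name, chunk size and 5-second stall threshold are all assumptions); with write-back caching enabled, flushes may be acknowledged before data reaches the disks, so results can still look better than the drives' real throughput.

```python
import os
import tempfile
import time


def measure_write(path: str, total_mib: int = 64, chunk_mib: int = 4,
                  stall_s: float = 5.0):
    """Hypothetical helper: write `total_mib` MiB to `path` in `chunk_mib`
    chunks, fsync after each chunk, and return
    (average MB/s, list of per-chunk MB/s, number of stalled chunks).
    A chunk that takes longer than `stall_s` seconds counts as a stall."""
    chunk = os.urandom(chunk_mib * 1024 * 1024)  # incompressible test data
    rates, stalls = [], 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(total_mib // chunk_mib):
            t0 = time.perf_counter()
            f.write(chunk)
            f.flush()                 # Python buffer -> OS
            os.fsync(f.fileno())      # ask the OS to push it to the volume
            dt = max(time.perf_counter() - t0, 1e-9)
            rates.append(len(chunk) / 1e6 / dt)
            if dt > stall_s:
                stalls += 1
    elapsed = max(time.perf_counter() - start, 1e-9)
    return len(chunk) * len(rates) / 1e6 / elapsed, rates, stalls


if __name__ == "__main__":
    # Point this at a path on the RAID volume; the temp dir is only a placeholder.
    target = os.path.join(tempfile.gettempdir(), "raid_write_test.bin")
    avg, rates, stalls = measure_write(target, total_mib=1024)
    print(f"average {avg:.1f} MB/s, slowest chunk {min(rates):.1f} MB/s, stalls: {stalls}")
    os.remove(target)
```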

I've now tried four different versions of the RST drivers, both from the ASUS website and from Intel.com. I've rebooted, replugged the drives, and changed all components, cables and the PSU; I've tried virtually everything! I've tried all write buffer modes, everything!

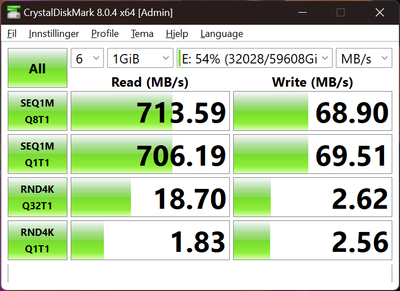

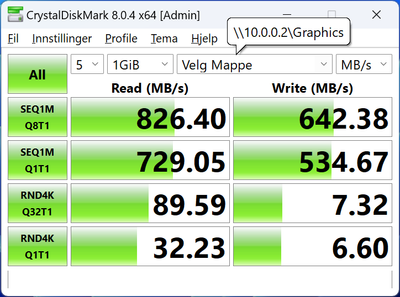

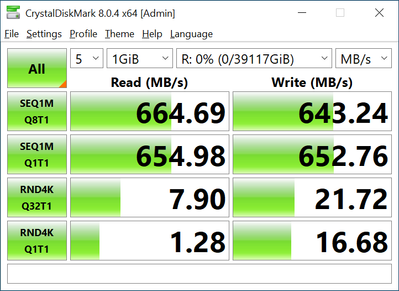

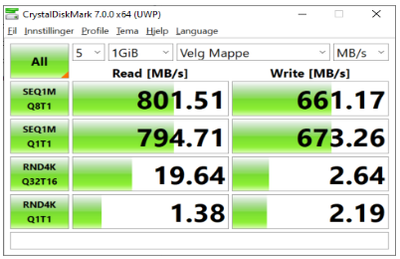

For reference, these are the speeds I'm getting on my old NAS with 7x10 TB drives in RAID-5 over 10 GbE network:

I'm expecting similar write speeds on my 5x16 TB array, but something is very wrong!

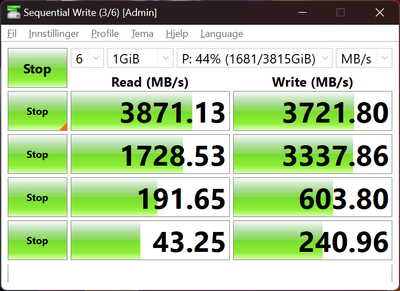

Just got a very strange result when testing my 2x2 TB PCIe SSD project drive:

Retested, and everything was back to normal:

According to my book:

Windows 11 is an experimental operating system.

Your board is an ASUS board, so the ASUS support team is who you should talk to.

Also according to my book:

Only an amateur would combine a gaming motherboard with so many HDD arrays and expect glitch-free performance.

Additionally, all of your benchmark software is only meant to give an indication of performance. These tools are not accurate or recognized as an industry standard, so they cannot be used as hard evidence and proof of a hardware-related issue.

My advice: build a second PC for storage handling.

Offload all unnecessary responsibilities from this basic workstation.

ASUS ProArt Z790-CREATOR WIFI is not a gaming motherboard, FYI. Not that it matters.

Users with Gigabyte or MSI motherboards are experiencing identical problems.

I've done some research and found this pretty interesting post on Reddit:

https://www.reddit.com/r/DataHoarder/comments/f99rwu/extremely_slow_write_speed_with_irst_raid5_array/

Copied from that post:

"I've setup a 6x8TB RAID5 array on an older ASUS Maximus Hero VII motherboard (4790K) that formats to right at 38TB. I've done testing on each drive individually and get roughly 200MB write speeds but now that I have all 6 drives in the array I'm getting writes in the 5-20MB/sec range - painfully slow.

In testing I created a mirror, then RAID5 with 3, 4, 5 and 6 drives. Here are the different write speeds:

- Mirror: 160MB
- RAID5 3 drives: 195MB
- RAID5 4 drives: 190MB
- RAID5 5 drives: 25MB
- RAID5 6 drives: 20MB"

It seems to be a bug in the Intel RST driver when using more than four drives.
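The Reddit numbers look even more lopsided when each total is divided by the number of data disks (drives - 1 in RAID-5). This small sketch uses only the figures quoted in that post; the helper name is hypothetical.

```python
# Write speeds quoted in the Reddit post (MB/s), keyed by number of drives in RAID-5.
reddit_raid5_mbps = {3: 195, 4: 190, 5: 25, 6: 20}


def per_data_disk_rates(totals: dict) -> dict:
    """Divide each total write speed by the number of data disks (drives - 1)."""
    return {drives: mbps / (drives - 1) for drives, mbps in totals.items()}


rates = per_data_disk_rates(reddit_raid5_mbps)
for drives, rate in rates.items():
    print(f"{drives} drives: {rate:.1f} MB/s per data disk")
ratio = reddit_raid5_mbps[4] / reddit_raid5_mbps[5]
print(f"4 -> 5 drives: total write speed drops by a factor of {ratio:.1f}")
```

Per data disk, the throughput falls from about 63 MB/s with four drives to about 6 MB/s with five, far more than the extra drive alone would explain.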

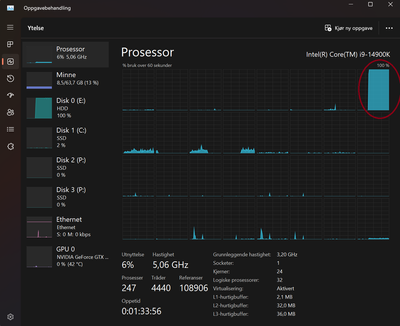

Another thing I discovered when digging into similar cases was that CPU usage sits at 100% on ONE core when writing to the RAID, indicating that the CPU may be busy running some buggy code...
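Some background on why RAID-5 writes cost CPU time at all: assuming RST computes RAID-5 parity in the driver on the host CPU (as software/firmware RAID generally does), every write involves XOR work. The sketch below shows the textbook technique, not Intel's implementation: parity for a full stripe row is the XOR of its data chunks, while a partial write uses read-modify-write, P' = P xor D_old xor D_new, which first needs the old data and old parity read back from disk.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length blocks."""
    if len(a) != len(b):
        raise ValueError("blocks must have the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def full_stripe_parity(data_chunks):
    """Full-row write: parity is the XOR of all data chunks, no disk reads needed."""
    return reduce(xor_bytes, data_chunks)


def rmw_parity(old_parity: bytes, old_data: bytes, new_data: bytes) -> bytes:
    """Partial-row write: old data and old parity must be read first,
    then new parity = old parity ^ old data ^ new data."""
    return xor_bytes(xor_bytes(old_parity, old_data), new_data)


# Four data chunks of 8 bytes each, as in one row of a 5-drive array.
chunks = [bytes([v] * 8) for v in (1, 2, 4, 8)]
parity = full_stripe_parity(chunks)
new_chunk = bytes([16] * 8)
updated = rmw_parity(parity, chunks[1], new_chunk)

# The shortcut matches recomputing the row from scratch with chunk 1 replaced.
assert updated == full_stripe_parity([chunks[0], new_chunk, chunks[2], chunks[3]])
# A lost chunk can be rebuilt from the parity and the surviving chunks.
assert full_stripe_parity([parity, chunks[0], chunks[2], chunks[3]]) == chunks[1]
```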

Your final test result is correct.

"Seems to be a bug with the Intel RST driver when using more than four drives."

No, it's a hardware bug, and it's not fixable. I have read 50 topics with the same complaint and there is no solution.

In theory, CMR HDDs will perform better in any RAID configuration, especially the WD Gold.

But most people shop for HDDs without proper research, and they come and complain afterwards.

The last practical solution is to use a separate add-on RAID controller (PCIe card).

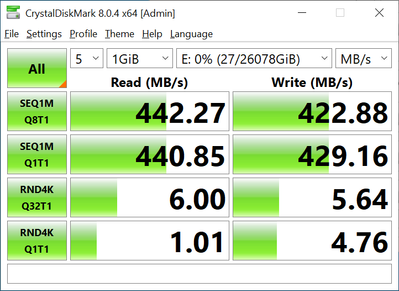

For reference, just tested creating a RAID-5 array with 3x14 TB Western Digital drives:

128k stripe, NTFS 128k block size

Write back cache enabled:

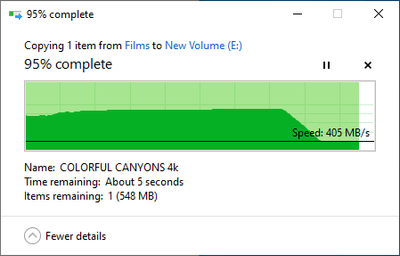

400+ MB/sec with just 3 drives. Very smooth.

Tested also the same 3 drives in RAID-0:

After write cache is filled at very high speeds, I get 600+ MB/sec smooth and stable.
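One way to compare the two 3-drive results is to normalize by the drives that actually hold data: two in a 3-drive RAID-5 (one drive's worth goes to parity) and three in RAID-0. This sketch uses the lower bounds reported above (400 and 600 MB/sec); the helper name is hypothetical.

```python
def per_data_disk(total_mbps: float, drives: int, level: str) -> float:
    """Hypothetical helper: spread measured throughput over the data-bearing drives."""
    parity_drives = {"raid0": 0, "raid5": 1}[level]
    return total_mbps / (drives - parity_drives)


raid5 = per_data_disk(400, 3, "raid5")  # 400 / 2 data disks
raid0 = per_data_disk(600, 3, "raid0")  # 600 / 3 data disks
print(f"RAID-5: {raid5:.0f} MB/s per data disk, RAID-0: {raid0:.0f} MB/s per data disk")
```

Both come out at roughly 200 MB/s per data disk, i.e. the 3-drive RAID-5 array appears to be writing about as efficiently as RAID-0 on the same drives.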

So.. Is Intel RST broken when using more than 4 drives?

"So... is Intel RST broken when using more than 4 drives?"

I would call it 100% broken as soon as someone tests five CMR WD Gold drives and it fails.

I personally wanted the best-performing RAID-1 on my Z87 board, which on paper supports all RAID combinations (server chipset).

This would include the OS partition too, so I was careful to select CMR, 512n, 2 TB drives for maximum compatibility with that specific application.

Assembling a more complex RAID array requires upfront planning; we do not simply hook up HDDs and pray.

We inspect each product's specifications before giving our money away.

I set my RAID-5 array up again from scratch, with 5 x 16 TB Seagate IronWolf drives.

It took a couple of weeks to initialize.

128 KB stripe size, 128 KB block size, write cache buffer flushing OFF, write-back cache ON.

It definitely seems like Intel RST is broken when using more than four drives in RAID-5.

I would expect more than 800 MB/sec write speed if it were working as intended, but no.
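A rough idealised ceiling for sequential full-stripe writes is (drives - 1) x per-drive speed, since parity costs one drive's worth of bandwidth. This sketch plugs in the 260 MB/sec rating quoted earlier in the thread; that rating is a best case, which is why a figure like 800 MB/sec is the more realistic expectation. The function name is hypothetical.

```python
DRIVE_MBPS = 260  # rated speed per IronWolf Pro drive, as quoted in the thread
DRIVES = 5


def raid5_seq_write_ceiling(drives: int, per_drive_mbps: float) -> float:
    """Idealised full-stripe sequential write ceiling for RAID-5:
    every data disk streams at full speed, one drive's worth goes to parity."""
    return (drives - 1) * per_drive_mbps


ceiling = raid5_seq_write_ceiling(DRIVES, DRIVE_MBPS)
# Real-world copy speeds and the CrystalDiskMark result reported in this thread.
for observed in (18, 19, 68):
    print(f"{observed} MB/s is {observed / ceiling:.1%} of the ~{ceiling:.0f} MB/s ceiling")
```

The ceiling works out to 1040 MB/s, so the observed write speeds are only a few percent of it.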

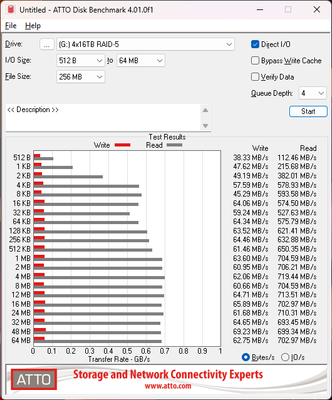

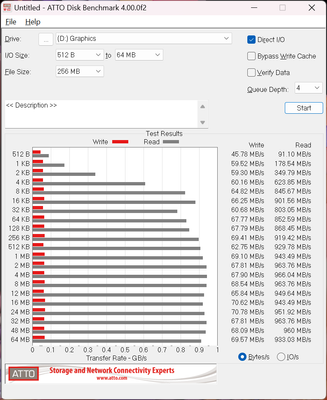

This is a benchmark of the array after initialization completed:

For reference, here is the same array benchmarked a few years ago, with four drives instead of five.

Is there anyone with 5 drives in RAID-5 who can confirm this behaviour?

Another benchmark of the 5 x 16 TB RAID-5 array:

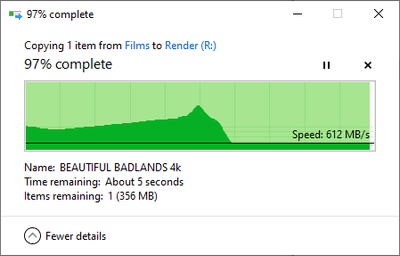

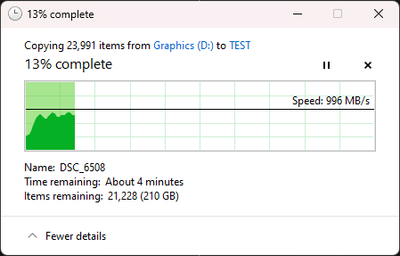

And some real life data:

Copying FROM the array gives roughly 900-1000 MB/sec.

Copying TO the array is painfully slow; the transfer frequently stalls at 0 bytes/sec.

Task Manager, meanwhile, shows a steadier transfer speed of around 50 MB/sec.
