What Is Normalization and When Should You Apply It?

Normalization is a technique commonly applied during data preparation for machine learning. Its goal is to bring the values of a dataset’s numeric columns onto a common scale without distorting the differences between values. We don’t need to normalize every dataset; it is only necessary when features have very different ranges.

For instance, consider a dataset containing two features, age (x1) and income (x2). Age ranges from 0 to 100 years, while income ranges from 0 to 20,000 or more. Income values can therefore be hundreds or even thousands of times larger than age values, and they vary over a much wider range.

These two features therefore lie on very different scales. In further analysis, such as multivariate linear regression, income will tend to influence the outcome far more than age simply because its values are larger, and this can cause difficulties when training the algorithm.

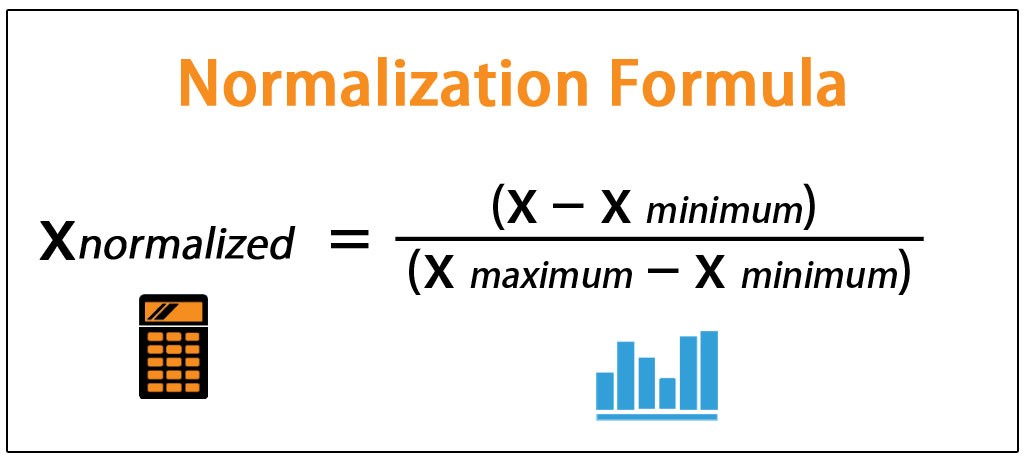

In scikit-learn, this kind of (min-max) normalization is implemented by MinMaxScaler. It rescales each feature to a fixed range, 0 to 1 by default; a different range, such as -1 to 1, can be requested through the feature_range parameter. It tends to work well in cases where standardization may not, for example when the distribution is not Gaussian or when the standard deviation is very small.
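Concretely, min-max normalization rescales each value x of a feature using that feature's minimum and maximum. The first formula gives the 0 to 1 range; the second is the general form for a target range [a, b], which is what scikit-learn's `feature_range=(a, b)` option produces.

$$
x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}
$$

$$
x' = a + \frac{(x - x_{\min})(b - a)}{x_{\max} - x_{\min}}
$$

For the age and income example, an age of 25 on a 0 to 100 range becomes 0.25, and an income of 5,000 on a 0 to 20,000 range also becomes 0.25, so both values now sit on the same scale.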

```python
from sklearn.preprocessing import MinMaxScaler
```
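A minimal usage sketch follows. The age and income values are made up for illustration; `fit_transform`, `data_min_`, `data_max_`, and `feature_range` are part of scikit-learn's `MinMaxScaler` API. The manual computation checks that the scaler applies the min-max formula column by column.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical toy data: each row is [age, income].
X = np.array([
    [25, 5_000],
    [40, 12_000],
    [60, 20_000],
    [18, 0],
    [90, 15_000],
], dtype=float)

scaler = MinMaxScaler()             # default feature_range=(0, 1)
X_scaled = scaler.fit_transform(X)  # learns per-column min/max, then rescales

print("min per column:", scaler.data_min_)
print("max per column:", scaler.data_max_)
print(X_scaled)

# The same result by hand: (x - min) / (max - min), column by column.
manual = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))
print("matches manual formula:", np.allclose(manual, X_scaled))

# A symmetric range can be requested explicitly.
X_sym = MinMaxScaler(feature_range=(-1, 1)).fit_transform(X)
print(X_sym.min(axis=0), X_sym.max(axis=0))
```

The scaler stores the learned minimum and maximum, so the same transformation can later be applied to new data with `scaler.transform`.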

When Is Normalization Important? For Distance Measurements

Normalization is primarily needed for algorithms that rely on distance or similarity measures, such as clustering and recommendation systems that use cosine similarity. Normalizing ensures that a variable does not dominate the result merely because it is measured on a larger scale.
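To make the effect concrete, here is a small sketch with three made-up people described by age and income. It compares Euclidean distances before and after min-max scaling; fitting the scaler on the ranges quoted earlier (age 0 to 100, income 0 to 20,000) is an assumption made for illustration.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical people described by [age, income].
a = np.array([25.0, 10_000.0])
b = np.array([70.0, 10_500.0])  # very different age, similar income
c = np.array([26.0, 15_000.0])  # almost the same age as a, different income


def euclidean(u, v):
    """Plain Euclidean distance between two feature vectors."""
    return float(np.linalg.norm(u - v))


# Raw features: the income difference dominates every distance.
raw_ab = euclidean(a, b)
raw_ac = euclidean(a, c)

# Fit the scaler on the assumed ranges: age 0-100, income 0-20,000.
scaler = MinMaxScaler().fit([[0, 0], [100, 20_000]])
sa, sb, sc = scaler.transform(np.vstack([a, b, c]))

scaled_ab = euclidean(sa, sb)
scaled_ac = euclidean(sa, sc)

print(f"raw:    d(a,b)={raw_ab:.1f}  d(a,c)={raw_ac:.1f}")        # b is closer
print(f"scaled: d(a,b)={scaled_ab:.3f}  d(a,c)={scaled_ac:.3f}")  # c is closer
```

In raw units, b looks like a's nearest neighbor only because the income gap is measured in thousands. After scaling, each difference is a fraction of its feature's range, so the large age gap between a and b counts, and c becomes the nearer point.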

Algorithms That Require Normalization

Below we list some machine learning algorithms that require data normalization:

- KNN with Euclidean distance, if you want all features to contribute equally to the model.

- Logistic Regression, SVM, Perceptrons, Neural Networks.

- K-Means

- Linear discriminant analysis, principal component analysis, kernel principal component analysis.
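For the first item in the list, here is a sketch of KNN with and without scaling. The data are hypothetical: the label depends only on age, while income varies across both groups. Wrapping the scaler in a scikit-learn pipeline (built with `make_pipeline`) means the minimum and maximum are learned from the training data only and then reused for new points.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler

# Hypothetical training data: [age, income]; the label depends on age only.
X_train = np.array([
    [22, 3_000], [25, 18_000], [28, 9_000],   # "young"
    [68, 4_000], [72, 17_000], [75, 10_000],  # "senior"
], dtype=float)
y_train = np.array(["young", "young", "young", "senior", "senior", "senior"])

query = np.array([[24, 10_100]], dtype=float)

# 1-NN on raw features (default metric is Euclidean): the nearest
# training point is picked almost entirely by income.
raw_knn = KNeighborsClassifier(n_neighbors=1).fit(X_train, y_train)
raw_pred = raw_knn.predict(query)[0]

# Same model with min-max scaling in front. The pipeline fits the scaler
# on X_train and applies that same transformation to the query.
scaled_knn = make_pipeline(MinMaxScaler(), KNeighborsClassifier(n_neighbors=1))
scaled_knn.fit(X_train, y_train)
scaled_pred = scaled_knn.predict(query)[0]

print("raw:", raw_pred)        # senior
print("scaled:", scaled_pred)  # young
```

Without scaling, the 24-year-old is matched to the 75-year-old whose income happens to be closest; with scaling, age counts again and the query is matched to the 28-year-old.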

Graphical-model-based classifiers such as Fisher LDA or Naive Bayes, as well as decision trees and tree-based ensembles such as Random Forest, are invariant to feature scaling.
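The invariance of tree-based models can be checked directly. This sketch, using hypothetical age and income data, fits one decision tree on raw features and another on min-max-scaled features. Because min-max scaling preserves the order of values within each feature, both trees choose the same split features and give the same predictions.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler
from sklearn.tree import DecisionTreeClassifier

# Hypothetical training data: [age, income] with an age-based label.
X = np.array([
    [22, 3_000], [25, 18_000], [28, 9_000],
    [68, 4_000], [72, 17_000], [75, 10_000],
], dtype=float)
y = np.array(["young", "young", "young", "senior", "senior", "senior"])

scaler = MinMaxScaler().fit(X)
X_scaled = scaler.transform(X)

tree_raw = DecisionTreeClassifier(random_state=0).fit(X, y)
tree_scaled = DecisionTreeClassifier(random_state=0).fit(X_scaled, y)

# New points: raw for the first tree, scaled with the same scaler for the second.
queries = np.array([[30, 5_000], [60, 15_000], [45, 12_000]], dtype=float)
pred_raw = tree_raw.predict(queries)
pred_scaled = tree_scaled.predict(scaler.transform(queries))

print(pred_raw, pred_scaled)
# Same feature used at every node (-2 marks leaves).
print(np.array_equal(tree_raw.tree_.feature, tree_scaled.tree_.feature))
```

Only the split thresholds differ between the two trees, and they correspond to the same position in each feature's ordering.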

Even so, rescaling the data may still be a good idea. Keep in mind, however, that normalization can make the model harder to interpret, so the decision ultimately depends on the business’s needs.
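If interpretability matters, one option is to keep the fitted scaler so that scaled values can be translated back into original units with `MinMaxScaler.inverse_transform`. This is a sketch with hypothetical data, not a complete answer to the interpretability trade-off.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical [age, income] data.
X = np.array([[25, 5_000], [40, 12_000], [60, 20_000], [18, 0]], dtype=float)

scaler = MinMaxScaler().fit(X)
X_scaled = scaler.transform(X)

# A scaled point such as [0.5, 0.5] has no units on its own...
point = np.array([[0.5, 0.5]])
# ...but the fitted scaler maps it back to years and income.
point_original = scaler.inverse_transform(point)
print(point_original)  # about 39 years and 10,000 income

# Round trip: scaling and then inverting recovers the original data.
X_back = scaler.inverse_transform(X_scaled)
print(np.allclose(X_back, X))
```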

And there we have it. I hope you have found this useful. Thank you for reading. 🐼