In 2006, inventor Ray Kurzweil released the book The Singularity Is Near (Amazon Affiliate Link), with a bold prediction that by the year 2045 we’d enter a “technological singularity.”

Around that time, he argued, the pace of improvement in technology would become a runaway phenomenon that would transform all aspects of human civilization. The word “singularity” became a Silicon Valley buzzword, striking fear, hope, and (often) derision into the hearts of millions.

In 2012 Kurzweil released How to Create a Mind (Amazon Affiliate Link), focusing on perhaps the most controversial of his many predictions: that we will create artificial, human-equivalent intelligence by the year 2029, capable of passing the Turing test.

The book argues that the structure and functioning of the human brain is actually quite simple, a basic unit of cognition repeated millions of times. Therefore, creating an artificial brain will not require simulating the human brain at every level of detail. It will only require reverse engineering this basic repeating unit.

I won’t comment on the feasibility of this project, but I want to draw on many of the same sources to offer a more conservative hypothesis: that many of the capabilities of the human mind can be extended and amplified now, using standard, off-the-shelf hardware and software.

Extended cognition will be the bridge from human to artificial intelligence, and construction of that bridge is well underway.

In this article I summarize some of Kurzweil’s arguments, and draw lessons for our understanding of Personal Knowledge Management today.

Think of your favorite song. Try to start singing it from a completely random starting point. You’ll find it’s difficult. You either want to start at a natural break point like a verse or chorus, or you have to play it in your head from the beginning in order to “find” a random spot.

This implies that our memories are organized in discrete segments. If you try to start mid-segment, you’ll struggle for a bit until your sequential memory kicks in.

Do you know your social security number by heart? Now try reciting the numbers backward. You’ll find it’s very difficult or impossible to do without either writing them down, or at least visualizing them in your head.

This implies that your memories are sequential, like symbols on a ticker tape. They are designed to be read in a certain direction and in order.

Now think about a simple habit like brushing your teeth. If you look closely, it consists of many small steps: pick up the toothbrush, squeeze some toothpaste onto the bristles, turn on the faucet, wet the bristles, and so on. If you look even closer, each of these small actions contains many even smaller steps, down to individual muscles flexing and neurons firing. All of this activity you perform effortlessly, each sequence triggered by a decision.

This implies that your memories are nested. Every action and thought is made up of smaller actions and thoughts.

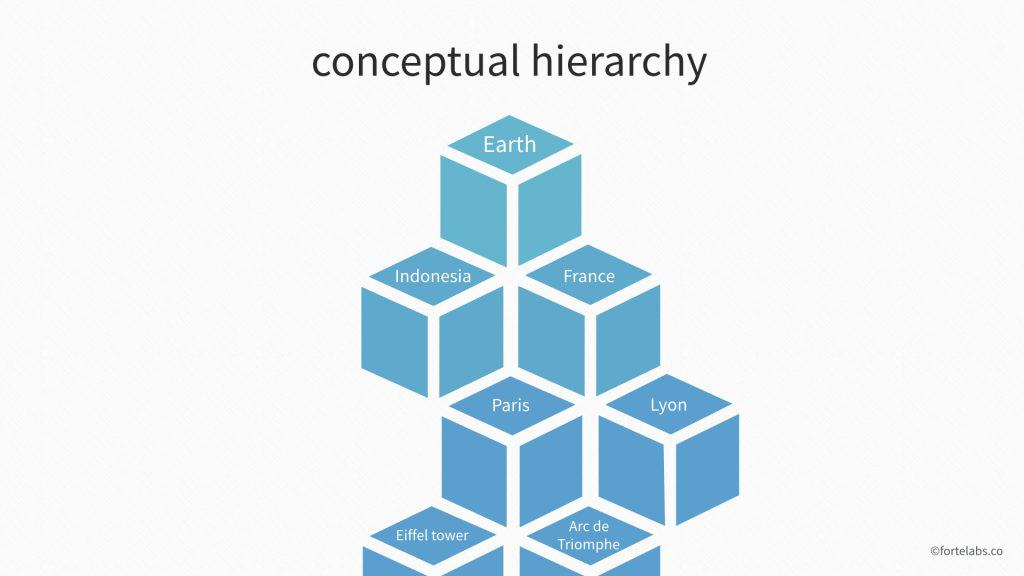

Now put these three characteristics together. Which structure best describes nested segments organized in sequential order?

A hierarchy.

The basic structure and functioning of the human brain is hierarchical. This may not seem intuitive at first. It sounds like how a computer works.

But consider how we use language. Our brains are able to collect a pattern – of ideas, images, emotions, experiences, facts, people – and encompass all of it with a word label, like “Indonesia.” Then we take that label, and use it as an element in another pattern, which we give a name, like “Southeast Asia.” Then we take that pattern, and put it in yet a higher-order pattern, like “Earth.”

Language evolved to take advantage of the hierarchical structure of our brains. Every concept is made up of smaller concepts, all the way down to the most fundamental ideas. We call this array of recursively linked concepts our “conceptual hierarchy.”

But why would our brains have evolved to be hierarchical before language?

Because reality itself is hierarchical: trees contain branches; branches contain leaves; leaves contain cells; cells contain organelles. Buildings contain floors; floors contain rooms; rooms contain doorways, windows, walls, and floors. Every object in the universe has parts, and those parts are made up of even smaller parts.
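To make the idea of nesting concrete, here is a minimal sketch in Python. The `PARTS` table and the `unfold` helper are hypothetical names made up for this illustration; the only thing taken from the text is the tree example itself.

```python
# Hypothetical toy data: each concept maps to the smaller concepts it contains.
PARTS = {
    "tree": ["branch"],
    "branch": ["leaf"],
    "leaf": ["cell"],
    "cell": ["organelle"],
}


def unfold(concept, depth=0):
    """Return indented lines listing a concept and everything nested inside it."""
    lines = ["  " * depth + concept]
    for part in PARTS.get(concept, []):
        lines.extend(unfold(part, depth + 1))
    return lines


print("\n".join(unfold("tree")))
```

Each concept only names its immediate parts, yet walking the table recursively recovers the whole hierarchy, one level of indentation per level of nesting.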

Massively parallel pattern recognition

So if human cognition is hierarchical, what are these hierarchies made up of?

Patterns.

The human brain has evolved to recognize patterns, perhaps more than to perform any other single function. Our brain is weak at processing logic, remembering facts, and making calculations, but pattern recognition is its deep core capability.

Deep Blue, the computer that defeated the chess champion Garry Kasparov in 1997, was capable of analyzing 200 million board positions every second. Kasparov was asked how many positions he could analyze each second. His answer was “less than one.”

So how was this even a remotely close match?

Because Kasparov’s 30 billion neurons, while relatively slow, are able to work in parallel.

He is able to look at a chess board and compare what he sees with all the (estimated) 100,000 positions he has mastered at the same time. Each of these positions is a pattern of pieces on a board, and they are all available as potential matches within seconds.

This is how Kasparov’s brain can go head to head against a computer that “thinks” 10 million times faster than he does (and is also millions of times more precise): his processing is slow, but massively parallel.

This doesn’t just happen in the brains of world chess champions.

Consider the last time you played tennis (or another sport)². As light from the bouncing tennis ball hit your eyes, photoreceptors turned that light into electrical signals that were passed along to many different kinds of neurons in the retina.

By the time two or three synaptic connections have been made, information about the location, direction, and speed of the ball has been extracted and is being streamed in parallel to the brain. It’s like sending a fleet of cars down an 8-lane freeway, instead of a bullet train down a single track – some cars can depart as soon as they’re ready, without having to wait for the others.

The way our brains work is through massively parallel pattern recognition. And the organ that has evolved to perform this activity is the neocortex.

The neocortex

The neocortex is an elaborately folded sheath of tissue covering the whole top and front of the brain, making up nearly 80% of its weight. It is responsible for sensory perception, recognition of everything from visual objects to abstract concepts, controlling movement, reasoning from spatial orientation, reason and logic, language – basically, everything we regard as “thinking.”

For our purposes, the most important thing to understand about the neocortex is that it has an extremely uniform structure.

This was first hypothesized by American neuroscientist Vernon Mountcastle in 1978. You would think a region responsible for much of the color and subtlety of human experience would be chaotic, irregular, and unpredictable. Instead, we’ve found the cortical column, a basic structure that is repeated throughout the neocortex. Each of the approximately 500,000 cortical columns is about two millimeters high and a half millimeter wide, and contains about 60,000 neurons (for a total of about 30 billion neurons in the neocortex).

Mountcastle also believed there must be smaller sub-units, but that couldn’t be confirmed until years later. These “mini-columns” are so tightly interwoven it is impossible to distinguish them, but they constitute the fundamental component of the neocortex. Thus, they constitute the fundamental component of human thought.

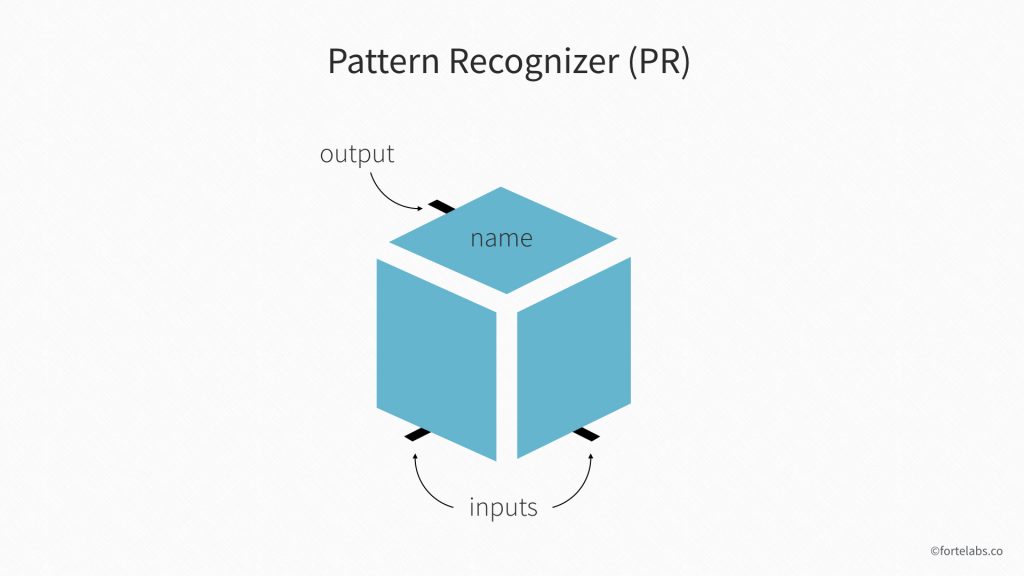

Pattern Recognizers (PRs)

We’ll call these cortical mini-columns Pattern Recognizers, or PRs for short. Each PR contains approximately 100 neurons, and there are on the order of 300 million of them in the entire neocortex.
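As a quick sanity check, the approximate figures quoted so far agree with each other. The snippet below just multiplies them out; the variable names are mine, and the PRs-per-column figure is derived here rather than stated in the book.

```python
# Approximate figures quoted in the text above.
columns = 500_000
neurons_per_column = 60_000
pattern_recognizers = 300_000_000
neurons_per_pr = 100

total_from_columns = columns * neurons_per_column
total_from_prs = pattern_recognizers * neurons_per_pr
prs_per_column = pattern_recognizers // columns  # derived, not from the source

print(total_from_columns)  # 30000000000
print(total_from_prs)      # 30000000000
print(prs_per_column)      # 600
```

Both routes arrive at roughly 30 billion neocortical neurons, which works out to about 600 PRs per cortical column.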

The basic structure of a PR has three parts: the input, the name, and the output.

The first part is the input – dendrites coming from other PRs that signal the presence of lower-level patterns (generally, dendrites and axons are both nerve fibers, but dendrites receive neuron signals and carry them toward the neuron, while axons transmit nerve signals to other neurons³).

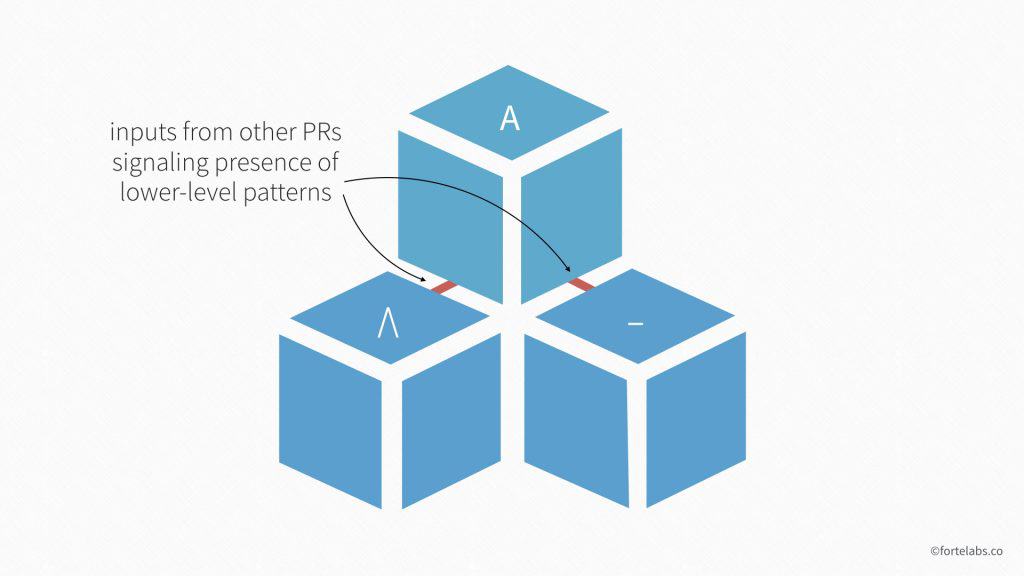

Reading a book and seeing certain shapes – like two conjoined diagonal lines combined with a crossbar – will trigger the inputs to a higher-level pattern, like the letter “A.” The shapes are the “inputs” to a PR dedicated to recognizing the letter “A.”

The second part of a PR is its “name.” That is, the specific pattern it is designed to detect. Although some PRs are coded to recognize language, these patterns are not limited to letters and words. They could be shapes, colors, feelings, sensations – basically anything we are capable of thinking, learning, predicting, recognizing, or acting on.

In the example above, “A” is the name of the PR designated to recognize the letter A.

The third part is the output – axons emerging from the PR that signal the presence of its designated pattern.

When the inputs to a PR cross a certain threshold, it fires. That is, it emits a nerve impulse to the higher-level PRs it connects to. This is essentially the “A” PR shouting “Hey guys! I just saw the letter ‘A’!” When the PR for “Apple” hears such signals for a, p, p again, l, and e, it fires too, shouting “Hey guys! I just saw ‘Apple’!” And so on up the hierarchy.

PRs for lower-level concepts (like the letter “A”), when fired, become the inputs for higher-level concepts, like the word “Apple.” Once a PR for “Apple” fires, it may form part of a still higher-level concept, like the sentence “An Apple a day keeps the doctor away.”

Eventually (after fractions of a second), all these inputs and outputs bubble up and emerge into consciousness, assembled into such abstract concepts as attractiveness, irony, happiness, frustration, and love.

The power of hierarchical thinking combined with massively parallel processing is that lower-level patterns do not need to be endlessly repeated at every subsequent level. The PRs for “Apple” or any other word that contains the letter “A” don’t need to fully describe the “A” pattern. They can all just “link” to the PRs that recognize that letter, much like a webpage can have hyperlinks to and from many other webpages.
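The input, name, and output structure described above can be sketched in a few lines of Python. This is a toy model under loud assumptions, not Kurzweil's implementation: the `PatternRecognizer` class, its `threshold` expressed as a fraction of active inputs, and the use of a set of seen letters are all invented for illustration, and letter order is ignored entirely.

```python
from dataclasses import dataclass, field


@dataclass
class PatternRecognizer:
    name: str                                   # the pattern this PR detects
    inputs: list = field(default_factory=list)  # links to lower-level PRs
    threshold: float = 1.0                      # fraction of inputs that must fire

    def fires(self, seen):
        """Return True if this PR's pattern is present, given the raw features `seen`."""
        if not self.inputs:
            # Lowest level: compare directly against the raw features.
            return self.name in seen
        active = sum(1 for pr in self.inputs if pr.fires(seen))
        return active / len(self.inputs) >= self.threshold


# One PR per letter; words link to these PRs instead of copying them.
letters = {ch: PatternRecognizer(ch) for ch in "aple"}
apple = PatternRecognizer("apple", [letters[ch] for ch in "apple"])
ape = PatternRecognizer("ape", [letters[ch] for ch in "ape"])

print(apple.fires(set("aple")))          # True: every input fired
print(apple.fires(set("apl")))           # False: the "e" input is missing
print(ape.inputs[0] is apple.inputs[0])  # True: both words share one "a" PR
```

The last line is the hyperlink idea in miniature: "apple" and "ape" do not each describe the letter "a", they both point at the same recognizer.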

We create the world as we discover the world

What’s important to know about this conceptual hierarchy is that signals flow downward as well as upward.

Higher-order PRs are actively adjusting the firing thresholds for lower-level PRs they’re connected to. If you’re reading left to right and see the letters A-P-P-L, the “Apple” PR will predict that it’s likely the next letter will be “e.” It will send a signal down to the “e” PR essentially saying “Please be aware there is a high likelihood that you will see your ‘e’ pattern very soon, so be on the lookout for it” (neurons are very polite).

The “e” PR will then lower its threshold (increasing its sensitivity) so it’s more likely to recognize its letter.

The neocortex is not just recognizing the world. It is always attempting to predict what will happen next, moment by moment. If it expects something strongly enough, the recognition threshold may be so low that it fires even when the full pattern is not present.

This is the neurological basis for how our narratives become our reality. You can find evidence for anything if your neocortex is looking for it hard enough. You literally see what you expect to see, and hear what you expect to hear.

These downward signals can also be negative or inhibitory. If you are holding a higher-level pattern called “my wife is in Europe,” PRs dedicated to recognizing your wife will be suppressed. That is, their recognition thresholds will be raised. They can still fire if, for example, you see your wife in the checkout line at the grocery store. But it will take more evidence, and you’ll do a double take.
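Here is a minimal sketch of those downward signals, assuming a toy recognizer that fires whenever a single "evidence" score reaches its threshold. The `Recognizer` class, the `expect` and `suppress` helpers, and every number below are hypothetical values chosen only to show the direction of the effect.

```python
class Recognizer:
    """Toy PR: fires when the evidence for its pattern reaches its threshold."""

    def __init__(self, name, threshold=0.8):
        self.name = name
        self.threshold = threshold

    def fires(self, evidence):
        return evidence >= self.threshold


def expect(pr, amount=0.3):
    """Excitatory top-down signal: lower the threshold (more sensitive)."""
    pr.threshold = max(0.0, pr.threshold - amount)


def suppress(pr, amount=0.15):
    """Inhibitory top-down signal: raise the threshold (more evidence needed)."""
    pr.threshold = min(1.0, pr.threshold + amount)


e = Recognizer("e")
smudged = 0.6              # weak evidence, such as a partly smudged letter
print(e.fires(smudged))    # False: not enough evidence on its own
expect(e)                  # the "Apple" PR has just seen A-P-P-L
print(e.fires(smudged))    # True: the same evidence now clears the bar

wife = Recognizer("wife")
glimpse = 0.9
print(wife.fires(glimpse))  # True before any expectation is set
suppress(wife)              # holding the pattern "my wife is in Europe"
print(wife.fires(glimpse))  # False: a glimpse is no longer enough
print(wife.fires(1.0))      # True: overwhelming evidence still gets through
```

The evidence never changes in either example. Only the expectation does, and that alone decides whether the pattern is "seen."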

This is the neurological basis for blindspots. If you don’t expect to see opportunities, upsides, or possibilities, you will become less likely to recognize even the ones that do show up. Thus your narrative is reinforced, making it even harder to see them.

The word “recognition” is actually a stretch.

What the mind is doing when it “recognizes” an image is not matching it against a database of static images. There is no such database in the brain. Instead, it is reconstructing that image on the fly, drawing on many conceptual levels, mixing and matching thousands of patterns at many levels of abstraction to see which ones fit the electric signals coming in through the retina.

According to this model, recursively stepping through hierarchical lists of patterns constitutes the language of human thought.

Patterns triggered in the neocortex trigger other patterns. Partially complete patterns send signals down the conceptual hierarchy, fitting new lenses to the data. Completed patterns send signals up, fitting new data to the lenses. Some patterns refer to themselves recursively, giving us the ability to think about our thinking or to “go meta.” An element of a pattern can be a decision point for another pattern, creating conditional relationships. Many patterns are highly redundant, with PRs dedicated to linguistic, visual, auditory, and tactile versions of the same object, which is what allows us to recognize apples in many different contexts.

The basic unit of human cognition

This Pattern Recognition Theory of Mind for how the neocortex works offers a radical possibility: that the basic unit of cognition is not the neuron, but the cortical mini-column (i.e. pattern recognizer). In other words, the idea that “neurons that fire together, wire together,” which emphasizes the plasticity of individual neurons and is known as the Hebbian Theory, may be incorrect.

Swiss neuroscientist Henry Markram, in his investigation of mammalian neocortices, went looking for “Hebbian assemblies” (neurons that had wired together). What he found instead were “elusive assemblies [whose] connectivity and synaptic weights are highly predictable and constrained.” He speculated that “[these assemblies] serve as innate, Lego-like building blocks of knowledge for perception and that the acquisition of memories involves the combination of these building blocks into complex constructs.”

In other words, learning is not a matter of individual neurons wiring together in endlessly complex, unique configurations. Instead, the basic architecture of cortical columns makes up an orderly, grid-like lattice, like city streets.

The brain starts out with a huge number of these “connections in waiting.” When two PRs want to connect to store a pattern relationship, they don’t need to extend a dendrite across whole brain regions. They just hook up to the nearest axons, like new apartment buildings hooking up to the municipal water system.

In this model, learning is not a matter of reconfiguring or building physical structures (which would be difficult and energy-intensive). It is a matter of connectivity between highly uniform pattern recognizers. What changes as we learn and experience things is the connectivity between these modules. The plasticity of our brains comes not from the fact that we can easily construct new nerve fibers or neurons, but that changing these connections is almost plug-and-play.

We are told continuously that the brain is hopelessly complex. But it could also be hopefully simple, a basic unit of cognition repeated millions of times.

Dharmendra Modha, manager of Cognitive Computing for IBM Research, writes that “neuroanatomists have not found a hopelessly tangled, arbitrarily connected network, completely idiosyncratic to the brain of each individual, but instead a great deal of repeating structure within an individual brain and a great deal of homology across species…. The astonishing natural reconfigurability gives hope that…much of the observed variation in cortical structure across areas represents a refinement of a canonical circuit; it is indeed this canonical circuit we wish to reverse engineer.”

We are the sum of our connectivity.

Takeaways

The model described above has many implications for our understanding of cognition, learning, knowledge, and even consciousness. But I want to focus on the implications for our endeavor of building a “second brain.”

We can build a second brain

The repetitive simplicity of cortical columns and PRs gives us hope that this won’t be an impossible task. It is actually simpler to build a whole brain at a high level of abstraction than to model every chemical and atomic interaction, just as we’ve built an artificial pancreas by duplicating its functionality rather than simulating every tiny islet cell. The world wide web is an early example of extending a single capability, communication, to a massive scale. Imagine if we did the same with all the other activities of our brains.

The truth is, we already have multiple brains. As I’ve written about previously, we have always extended our cognition into our tools and our environment. Even purely biologically, there is plentiful evidence that brain regions operate semi-independently and can substitute for each other. People born without certain brain regions, or even missing an entire hemisphere, can in some cases lead largely normal lives. The natural plasticity already found within our biological brain should make the possibility of additional extension more likely.

Our minds have a turning radius (or transaction cost)

The phenomenon of action potentials slowly climbing their way up a conceptual hierarchy explains a lot we’ve observed about how the brain works.

The Zeigarnik Effect describes how, when people move on from an incomplete task, the details of that task remain as a sort of “cognitive residue” for some time afterwards. You may have noticed, when stepping away from an intensely focused activity, that it takes a while for your mind to “let go” of the problem. There is a limit to how fast you can switch tasks, because changing context requires “desaturating” the neurons before resaturating them with other thoughts.

Traversing each level takes from a few hundredths to a few tenths of a second. Thus a moderately high-level pattern such as a human face can take as long as an entire second to recognize.
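A rough back-of-the-envelope sketch shows what those figures imply. I'm assuming "a few" means three, so 30 and 300 milliseconds per level; those two values and the `levels_within` helper are my own illustration, not numbers from the book.

```python
# Assumed per-level delays, reading "a few" as three (illustrative only).
FAST_MS_PER_LEVEL = 30    # "a few hundredths of a second"
SLOW_MS_PER_LEVEL = 300   # "a few tenths of a second"


def levels_within(budget_ms, per_level_ms):
    """How many whole levels of the hierarchy can be traversed in the time budget."""
    return budget_ms // per_level_ms


one_second = 1000
print(levels_within(one_second, SLOW_MS_PER_LEVEL))  # 3
print(levels_within(one_second, FAST_MS_PER_LEVEL))  # 33
```

Under these assumptions, a one-second recognition corresponds to somewhere between 3 and 33 levels climbed, depending on how fast each hop is.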

These cognitive transaction costs can be understood as a “turning radius.” The more patterns you have loaded up into multiple levels of your hierarchy, the faster you can make progress, as you recognize patterns everywhere and everything seems to connect to everything else.

But with that momentum you sacrifice agility, as “loading up” a different context is a biological process with physical constraints. It’s like trying to turn onto a side street while going 90 miles per hour. Even computers have such a turning radius, by the way: when you restart your computer or reset your RAM, you are “flushing” the memory of the information it’s been working on.

What’s exciting about this is it implies a floor for the smallest size packet of work it makes sense for a human to work on. Smaller batch sizes are powerful, but does this mean we should be working in 30-second chunks? No. The bottom limit of our work sessions is defined by our mental transaction costs, i.e. by how quickly we can activate, deactivate, and reactivate our pattern recognizers.

Desired outcomes are lenses

This model could also explain the neurological basis of the law of attraction, the belief that “by focusing on positive or negative thoughts people can bring positive or negative experiences into their life.” Setting an intention or formulating a desired outcome is not just wishful thinking. It may actually be our conscious mind triggering a cascade of signals that either inhibit or strengthen connections at every lower level, even the unconscious ones. This kind of filtering is sometimes attributed to the reticular activating system.

There is effectively infinite information we could take in through our senses, and therefore an infinite number of interpretations of what it means. Like a GPS system that leads us through any terrain to get us where we want to go, the conceptual hierarchy of our mind is designed to surface what we decide is important and valuable. Whether that includes opportunities and possibilities or problems and challenges is up to us.

Randomness is a feature

Paradoxically, the discovery that our brain is highly ordered and regular actually makes randomness even more important. Cortical mini-columns have been found to be so tightly interwoven that they “leak” action potentials into each other. This is a feature, allowing us to think thoughts outside the strictly logical (as in art, music, dance, etc.).

Similarly, the purpose of P.A.R.A. is to create sandboxes where the contents of notes can leak into each other. The purpose of RandomNote is to allow even notes in different notebooks to encounter each other. The purpose of Progressive Summarization is to allow ideas and phrases to stay in their raw form for as long as possible, where they remain available for “mixing” into a wider variety of “idea recipes.”

The role of our biological brain will be as an “expert manager”

What will our first, biological brain do once we have all these technological systems in place? It will become an “expert manager.” This term comes from experiments in which a software program is put in charge of selecting and managing a collection of other, more specialized software programs.

Watson, the IBM computer that famously beat the top Jeopardy champions, worked exactly this way. Its Unstructured Information Management Architecture (UIMA) deploys hundreds of different subsystems. The UIMA knows the strengths and weaknesses of each problem-solving subsystem, and mixes and matches them together to solve the problem it’s presented with. It can incorporate answers from subsystems even when they don’t know the final solution, by using them to narrow down the problem space. The UIMA can also calculate the confidence of its answer, just like a human brain.
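The expert-manager idea can be sketched as a function that polls several specialists and combines their partial answers. To be clear, this is not UIMA's actual API or Watson's algorithm: `expert_manager`, the trust weights, and the three toy subsystems are all hypothetical, made up to illustrate the pattern.

```python
def expert_manager(question, subsystems):
    """Poll every subsystem and return (best_answer, confidence).

    `subsystems` is a list of (trust, function) pairs. Each function returns a
    dict of candidate answers mapped to that subsystem's own confidence.
    """
    scores = {}
    for trust, subsystem in subsystems:
        for answer, confidence in subsystem(question).items():
            scores[answer] = scores.get(answer, 0.0) + trust * confidence
    if not scores:
        return None, 0.0
    best = max(scores, key=scores.get)
    return best, scores[best] / sum(scores.values())


# Hypothetical specialists; none of them needs to know the final answer alone.
def geography(question):
    return {"Jakarta": 0.6, "Bangkok": 0.2}


def wordplay(question):
    return {"Jakarta": 0.3}


def history(question):
    return {}  # has nothing to contribute to this question


answer, confidence = expert_manager(
    "Capital of Indonesia?",
    [(1.0, geography), (0.5, wordplay), (1.0, history)],
)
print(answer, round(confidence, 2))  # Jakarta 0.79
```

The manager never solves the problem itself. It only knows how much to trust each specialist and how to weigh what they report.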

This is a model for how our biological brains could manage cognitive extension. Interestingly, professional knowledge tends to be more organized, structured, and less ambiguous than common sense knowledge. I think we’ll increasingly see a “division of cognition” where we outsource subject matter knowledge to external systems, but keep our own common sense and general knowledge in the captain’s chair.

These expert systems will serve us like army staffs serving a general: thinking up and pre-planning numerous courses of action, and presenting them to us as possibilities for approval. In science fiction stories, these alternative futures are spun up into simulated universes running thousands of times faster than real time, which then report back to us their outcomes.

The mind as computer

The Pattern Recognition Theory of Mind asks the question, “What if the human brain was a computer?” and then takes its conclusions to the furthest extremes. It is not “true” or “correct,” any more than historical analogies comparing the brain to a steam engine.

But it is potentially useful. Paradoxically, a conceptual hierarchy made up of massively parallel pattern recognizers would explain a lot about our subjective experience. The feeling that something is “on the tip of the tongue” could be pattern recognizers firing below the level at which they become conscious. The certainty of “I know it when I see it” could be combinations of PRs firing without a corresponding, higher-order word label. Our intuition acquires new depths when it isn’t limited to conscious patterns.

Innovations in deep learning famously came from examinations of the human brain. Maybe now it’s time for the human brain to learn from computers.

Note: I’ve published this as a free article because I would really like feedback on it, especially from neurologists and other brain experts. I know the theory is not the “right” one, but it has huge implications for what I’m working on and I’d like to know what exactly are its limits. I’m not looking for hot takes like this popular Aeon article, which specifically calls out Kurzweil’s book without the author having read it (in my estimation, based upon his critiques). You can email feedback to [email protected].

1: How to Create a Mind (Amazon Affiliate Link), by Ray Kurzweil (my notes here)

2: http://nautil.us/issue/59/connections/why-is-the-human-brain-so-efficient

3: http://www.differencebetween.net/science/difference-between-axons-and-dendrites/

- Posted in Book summary, Books, Cognitive science

- BY Tiago Forte