On configurability, or configuration-driven development

Continuing the discussion of best practices in RPA development, I am going to talk about configurability, or perhaps configuration-driven development, in this article. Writing small workflows, functions and methods eases your cognitive load and makes changes easier by keeping things that change for the same reason in one place. Being configurable goes a step further: it means moving things that change frequently out of the code, so that you need little or no time to make a new release when such changes arise.

Like it or not, change is a constant in the software development lifecycle. It could be a simple change to the environment the solutions run on or, not so surprisingly, a change in an upstream system that sends failures (caught and uncaught) downstream. Modern software development promotes frequent, small changes and strives towards the ideal of flexible, scalable software. Therefore, anticipating and preparing for change must be a consideration when designing and developing solutions.

"Software engineering is programming integrated over time" - Multiple authors, Software Engineering at Google

Generally, in the RPA context, there are three kinds of changes: changes in the environment the code operates in, changes in the input/output systems, and lastly, changes in the rules of the business process. I used to think handling them was only a matter of grabbing my magnifying glass, tracing the change to the right areas of code and adding extra if/else or case statements. But there are better ways.

What inspired me was a gem I found in one of the chapters of the classic Programming Pearls by Jon Bentley. The philosophy is: don't write a big program when a little one will do. Let the data structure the program, not the other way around. Data can structure a program by replacing complicated code with an appropriate data structure. Although the particulars change, the theme remains. Space reduction and code simplification were the main motivations of this philosophy, but good data structure design can have other positive effects, such as increased portability and maintainability, both of which are must-haves when dealing with change.

"Representation is the essence of programming" - Fred Brooks, The Mythical Man-Month

Data structures are a deep topic, but there are two tools I have used repeatedly for solutions in RPA. They are my first go-to choices because they are easy to use, easy to change and popular. They also address almost all of the programming problems I have encountered in RPA. If a problem requires more complicated data structures, chances are it would not be an RPA project anyway (or not yet).

Key-value Pairs

There are many different forms and names for this data format, but at its core the idea is simple: each key has one value. The key must be unique, but the value can be anything, whether a single value or a list. And in the age of the Internet, nothing is better suited to the job than JSON (JavaScript Object Notation).

I use JSON to store operational details that are likely to change, such as the folders bots have to poll and write output to, the list of recipients bots have to notify, or the maximum time to wait for a service to return. All components of the same solution should point to the same JSON file or address, and we can also make configurable which JSON the code reads at run time.

At Medline, we use a single JSON file to store all the configurable variables for a project. Specifically, all component packages, such as the dispatcher, performer and wrapper, point to an asset that stores the JSON content. This JSON content is available to view from Orchestrator. With a simple search, any support person can come in and change the values without making code changes.

Recently, I was tasked with extending a current solution to a new customer group, which is serviced by a different team of agents. Although the rules and steps are the same, the folders, the mailboxes and the process owners are completely different. Cloning the current solution and adapting it to the new group would be convenient, but I did not want the potential headache of maintaining two almost identical repositories in the future. Therefore, I made a new JSON to store those different operational details, from which bots get the desired parameters at run time.
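A minimal sketch of this idea in Python, purely as an illustration (the solution described here runs on an RPA platform, not in Python): one code base serves several customer groups, and only the config file differs. The group names, file names, keys and the helper `write_demo_configs` are all hypothetical.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical customer groups and config file names, for illustration only.
CONFIG_FILES = {
    "GroupA": "group_a.json",
    "GroupB": "group_b.json",
}


def load_config(config_dir, customer_group):
    """Return the operational parameters for one customer group."""
    if customer_group not in CONFIG_FILES:
        raise ValueError(f"No config registered for group: {customer_group}")
    path = Path(config_dir) / CONFIG_FILES[customer_group]
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def write_demo_configs(config_dir):
    """Write two tiny config files so the sketch can be run as-is."""
    demo = {
        "GroupA": {"Mailbox": "group-a@example.com", "InputFolder": "GroupA/In"},
        "GroupB": {"Mailbox": "group-b@example.com", "InputFolder": "GroupB/In"},
    }
    for group, values in demo.items():
        path = Path(config_dir) / CONFIG_FILES[group]
        path.write_text(json.dumps(values, indent=2), encoding="utf-8")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        write_demo_configs(tmp)
        # The same code serves both groups; only the config differs.
        for group in CONFIG_FILES:
            print(group, load_config(tmp, group)["Mailbox"])
```

Onboarding a further group in this sketch would mean adding one more config file and one entry in `CONFIG_FILES`, with no change to the processing logic.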

Having all operational parameters in one place also comes in very handy for credential management. Let's say a subset of the credentials the bots are using expires one day without notice. A typical response would be to shut down the bots while those credentials are renewed. Depending on the situation, we could be talking about a blackout that ranges from a few hours to a few days. A better practice is to keep two sets of credentials on hand. When one set is down, all we need to do is change the config JSON so that the bots point to the other set of credentials.

{
  "RootFolder": {
    "Dev": "SharedDrive\\Parent\\Child\\ProjectName\\PackageName",
    "Prod": "DifferentSharedDrive\\Parent\\Child\\ProjectName\\PackageName"
  },
  "SAPCredentialAssetName": "DepartName_GroupName_SolutionName_SAPCreds"
}
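To show how such a config might be consumed, here is a small Python sketch. It parses the JSON above and picks the root folder for the current environment. The function name `resolve_settings` and the returned key names are assumptions made for this example. Note that backslashes in Windows-style paths have to be doubled for the text to be valid JSON.

```python
import json

# The sample config as JSON text. The raw string keeps the doubled
# backslashes intact so that the JSON parser sees valid escapes.
CONFIG_TEXT = r"""
{
  "RootFolder": {
    "Dev": "SharedDrive\\Parent\\Child\\ProjectName\\PackageName",
    "Prod": "DifferentSharedDrive\\Parent\\Child\\ProjectName\\PackageName"
  },
  "SAPCredentialAssetName": "DepartName_GroupName_SolutionName_SAPCreds"
}
"""


def resolve_settings(config_text, environment):
    """Return the settings a bot needs for the given environment."""
    config = json.loads(config_text)
    root_folders = config["RootFolder"]
    if environment not in root_folders:
        raise KeyError(f"Unknown environment: {environment}")
    return {
        "root_folder": root_folders[environment],
        "sap_credential_asset": config["SAPCredentialAssetName"],
    }


if __name__ == "__main__":
    settings = resolve_settings(CONFIG_TEXT, "Dev")
    print(settings["root_folder"])
    print(settings["sap_credential_asset"])
```

In this sketch, switching to a backup set of credentials means editing only the value of `SAPCredentialAssetName` in the config; the code that reads it stays the same.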

There is no doubt that JSON is a highly flexible data format and a powerful tool for programming needs. However, there are times when representing decisions and logic in JSON would result in deeply nested structures that defeat the purpose of being easy to use and easy to change. In such cases, I turn to my second go-to tool: the table (or spreadsheet).

Table-Driven Methods

Steve McConnell dedicated a whole chapter to these methods in Code Complete. The main idea is that if we represent the if/else and case statements of a program in a table, we can get rid of a lot of code and, in some cases, improve the program's performance. The most basic form of table-driven method is the indexed access table. The program reads the table at startup, uses the primary data to look up a key in the table, and accesses the main data we're interested in.

I use this method most often to organize the message codes of a program. In a medium to complex project, the number of scenarios in which bots stop, due to both expected and unexpected situations, can be over 20. If I want to reword a particular error message, or there is a need to update all error messages, I would have to trace each message to the place in the code where the error is raised and make the change there. The process is tedious and very error-prone. With an indexed access table, I only have to change the table, which lives outside of the code.
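A minimal Python sketch of a message table follows. The codes, severities, message texts and placeholder names are invented for illustration; in practice the table would live in a CSV file or spreadsheet outside the code, and here it is inlined only so the example runs on its own.

```python
import csv
import io

# Hypothetical message table. Each row is keyed by a message code.
MESSAGE_TABLE_CSV = """code,severity,message
E001,Error,Input folder {folder} could not be reached.
E002,Error,Login to {system} failed.
W001,Warning,No new files were found in {folder}.
"""


def load_messages(csv_text):
    """Read the table once and index the rows by message code."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["code"]: row for row in reader}


def format_message(messages, code, **details):
    """Look up a message by code and fill in its placeholders."""
    row = messages[code]
    text = row["message"].format(**details)
    return f"[{row['severity']}] {code}: {text}"


if __name__ == "__main__":
    messages = load_messages(MESSAGE_TABLE_CSV)
    print(format_message(messages, "E002", system="SAP"))
```

The code only ever refers to a message by its code, so rewording a message is an edit to one table cell.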

In other cases, I was able to trim a lot of code by using an indexed access table to store the parameters of a function. Let's say I want to extract a phrase that sits between two other phrases, and I have close to 20 different phrases to extract. In this case, I lay out the table like the following: the primary data is the field name, with one column for the prefix phrase, one column for the target phrase and one column for the suffix phrase. The program is now just a simple loop over the rows of this table, using the values from the other columns to give the extraction function what it needs to do its job. During testing, it's amazingly easy to change the details in this table rather than search for the relevant line of code in the program.

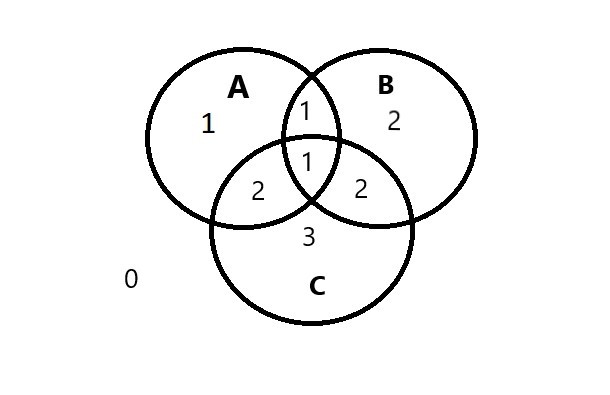
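The loop over such a table could look like the following Python sketch. It is a simplification that keeps only the field name, prefix and suffix columns; the field names, phrases and sample text are made up for the example, and the regular-expression approach is just one possible way to implement the extraction function.

```python
import re

# Hypothetical extraction table: one row per field to extract.
EXTRACTION_TABLE = [
    {"field": "InvoiceNumber", "prefix": "Invoice No:", "suffix": "Date:"},
    {"field": "InvoiceDate", "prefix": "Date:", "suffix": "Customer:"},
    {"field": "Customer", "prefix": "Customer:", "suffix": "Total:"},
]


def extract_between(text, prefix, suffix):
    """Return the text between prefix and suffix, or None if not found."""
    pattern = re.escape(prefix) + r"(.*?)" + re.escape(suffix)
    match = re.search(pattern, text, flags=re.DOTALL)
    return match.group(1).strip() if match else None


def extract_fields(text, table):
    """Apply the same extraction function to every row of the table."""
    return {
        row["field"]: extract_between(text, row["prefix"], row["suffix"])
        for row in table
    }


if __name__ == "__main__":
    sample = "Invoice No: 12345 Date: 2021-03-01 Customer: Acme Corp Total: 99.00"
    for field, value in extract_fields(sample, EXTRACTION_TABLE).items():
        print(field, "=", value)
```

Adding a new field to extract is then a new row in the table rather than a new block of code.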

We can also represent combinations of conditions in a table. This is usually applied when we need to categorize something and then take an action based on its category. Suppose you want to assign an action based on the situation you're in, which can be broadly defined as a combination of three conditions: A, B and C.

Steve McConnell suggested replacing complicated pseudocode like this:

with this:

To me, this table is easier to understand and also does the job:

Let's say one day my clients tell me they want to apply the current automation to a new customer, but with one difference in the business rules: they want the 7th row in the table above to be defined as category 3. Instead of figuring out where I need to make the change and whether I have missed any other logic, I just need to modify this table and go into regression testing. Although the change itself is simple, it builds on the many hours of thinking and sketching I spent a year ago when I designed this table.
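As a rough Python sketch of a decision table over three conditions: the conditions A, B and C come from the discussion above, but the category values below are invented for illustration and are not the table from my project. The rule change is expressed as a one-row override of the table.

```python
# Hypothetical decision table: each combination of (A, B, C) maps to a category.
DECISION_TABLE = {
    (True, True, True): 1,
    (True, True, False): 1,
    (True, False, True): 2,
    (True, False, False): 2,
    (False, True, True): 2,
    (False, True, False): 3,
    (False, False, True): 4,  # 7th row in this illustrative ordering
    (False, False, False): 4,
}


def categorize(a, b, c, table=DECISION_TABLE):
    """Look up the category for a combination of the three conditions."""
    return table[(bool(a), bool(b), bool(c))]


# A new customer with one different rule: copy the table and override one row.
NEW_CUSTOMER_TABLE = {**DECISION_TABLE, (False, False, True): 3}


if __name__ == "__main__":
    print(categorize(False, False, True))
    print(categorize(False, False, True, table=NEW_CUSTOMER_TABLE))
```

Because every combination is listed explicitly, it is easy to see that no case has been missed, and the lookup function never changes when a rule does.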

"Software's primary technical imperative is managing complexity." - Steve McConnell, Code Complete

It could not be truer. Identifying areas that are complex and/or likely to change, and finding a way to put them outside of the code, is key. I find it helpful to ask this question before committing to any design or code: what is the expected lifespan of my solution? And run if someone tells you they will only provide support for a month.