What is Data Completeness? Definition, Examples, and KPIs

Making decisions based on incomplete data is like baking a cake with missing ingredients: not a good idea. You may have the right information on how much butter, sugar, and eggs to add to your batter. But if you don’t realize that the recipe writer omitted flour, you’ll end up with a soupy mess that barely resembles a cake.

The same is true with data. If all the information in a data set is accurate and precise, but key values or tables are missing, your analysis won’t be effective.

That’s where data completeness comes in. Data completeness is a measure of how much essential information is included in a data set or model, and is one of the six dimensions of data quality. It describes whether the data you’ve collected reasonably covers the full scope of the question you’re trying to answer, and whether any gaps, missing values, or biases will affect your results.

Data completeness matters to any organization that works with and relies on data (and these days, that’s pretty much all of them). For example, missing transactions can lead to under-reported revenue. Gaps in customer data can hurt a marketing team’s ability to personalize and target campaigns. And any statistical analysis based on a data set with missing values could be biased.

Like any data quality issue, incomplete data can lead to poor decision-making, lost revenue, and an overall erosion of trust in data within a company.

In other words, data completeness is kind of a big deal. So let’s dive in and explore what data completeness looks like, the differences between data completeness and accuracy, how to assess and measure completeness, and how data teams can solve common data completeness challenges.

Examples of incomplete data

Before we get into the nitty-gritty of completeness, let’s discuss a few examples of how companies can encounter incomplete data.

Missing values

Incomplete data can sometimes look like missing values throughout a data set, such as:

- Missing salary numbers in HR data sets

- Missing phone numbers in a CRM

- Missing location data in a marketing campaign

- Missing blood pressure data in an EMR

- Missing transactions in a credit ledger

And the list goes on.

Missing tables

In addition to missing values or entries, whole tables can be unintentionally omitted from a data set, like:

- Product event logs

- Sales transactions for a given product line

- App downloads

- A patient’s entire EMR

- A customer 360 data set

And like missing values, the list goes on!

Data completeness versus data accuracy

Data completeness is closely related to data accuracy, and the two terms are sometimes used interchangeably. But the factors that determine the accuracy and the completeness of organizational data are not the same.

Accuracy reflects the degree to which the data correctly describes the “real-world” objects being described. For example, let’s say a streaming provider has 10 million overall subscribers who can access its content. But its CRM unintentionally duplicates the records of the 3 million subscribers who log into the service via their cell phone provider. According to the CRM’s data set, the streaming provider has 13 million subscribers.

No customer data is missing — quite the opposite — but the CRM data does not reflect the real world. It’s not accurate.

In another example, a marketing team at a sportswear company is running a limited-time campaign to test paid ad performance for different products across customer segments. Their marketing software collects all the necessary data points — bidding details, engagement, desired channels, views, clicks, impressions, and demographics — as actual conversions took place. But through a cookie-tracking error, geographic location was lost.

Now, the team can see that swimwear outperformed outerwear, but cannot analyze how customers responded differently based on their location. They can look at the cost-per-click, performance by channel, and customer age, but not geography. Since knowing a customer’s location is important to understand how seasonality impacts demand, the marketing team doesn’t have a full and complete picture of their campaign performance.

In this case, the data is accurate but incomplete.

One note: accuracy is also tied closely to precision. For example, a luxury car company may measure customer revenue rounded up to the nearest dollar, but track pay-per-click ad campaigns by dollar and cent. The smaller or more sensitive the data point, the more precise your measurement needs to be.

Data completeness versus data quality

Data completeness is an element of data quality, but completeness alone does not equal quality. For example, you could have a 100% complete data set — but if the information it contains is full of duplicated records or errors, it’s not quality data.

As we mentioned before, completeness is one of the six dimensions of data quality. In addition to completeness and accuracy, data quality encompasses consistency (the same across data sets), timeliness (available when you expect it to be), uniqueness (not repeated or duplicated), and validity (meets the required conditions).

All of these factors combine to produce quality data, so each is important, but none of them guarantees trusted, reliable data on its own.

How to determine and measure data completeness

Few real-world data sets will be 100% complete, and that’s OK. Missing data isn’t a major concern unless the information that you lack is important to your analysis or outcomes. For example, your sales team likely requires a first and last name to conduct successful outreach — but if their CRM records are missing a middle initial, that’s not a dealbreaker.

The first step to determining data completeness is to outline what data is most essential, and what can be unavailable without compromising the usefulness and trustworthiness of your data set.

Then, you can use a few different methods to quantify the completeness of the most important tables or fields within your assets.

Attribute-level approach

In an attribute-level approach, you can evaluate how many individual attributes or fields you are missing within a data set. Calculate the percentage of completeness for each attribute that’s deemed essential to your use cases.
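As a minimal sketch of the attribute-level calculation, the Python below computes a completeness percentage per essential field. The function names, the sample CRM records, and the rule that `None` and blank strings count as missing are all illustrative assumptions, not part of any particular tool.

```python
from typing import Any, Iterable


def is_missing(value: Any) -> bool:
    """Assumption: None and blank strings count as missing values."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def attribute_completeness(
    records: list[dict], essential_fields: Iterable[str]
) -> dict[str, float]:
    """Return, per field, the percentage of records with a populated value."""
    total = len(records)
    result = {}
    for field in essential_fields:
        present = sum(1 for r in records if not is_missing(r.get(field)))
        result[field] = 100.0 * present / total if total else 0.0
    return result


# Hypothetical CRM extract used only for illustration.
crm = [
    {"first_name": "Ada", "last_name": "Lovelace", "phone": "555-0100"},
    {"first_name": "Alan", "last_name": "Turing", "phone": ""},
    {"first_name": "Grace", "last_name": None, "phone": "555-0102"},
    {"first_name": "Edsger", "last_name": "Dijkstra", "phone": None},
]

scores = attribute_completeness(crm, ["first_name", "last_name", "phone"])
print(scores)  # {'first_name': 100.0, 'last_name': 75.0, 'phone': 50.0}
```

What counts as "missing" is a design choice: some sources use placeholders such as "N/A" or 0, and those would need to be added to the check.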

Record-level approach

Similarly, you can evaluate the completeness of entire records or entries in a data set. Calculate the percentage of records in which every essential field is populated.
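A record-level check can be sketched the same way. In this illustrative Python example, a record counts as complete only when every required field is populated. The field names and sample contacts are hypothetical, and treating blank strings as missing is an assumption.

```python
def is_missing(value):
    """Assumption: None and blank strings count as missing values."""
    return value is None or (isinstance(value, str) and not value.strip())


def record_completeness(records, required_fields):
    """Percentage of records in which every required field is populated."""
    if not records:
        return 0.0
    complete = sum(
        1
        for r in records
        if all(not is_missing(r.get(f)) for f in required_fields)
    )
    return 100.0 * complete / len(records)


# Hypothetical contact records used only for illustration.
contacts = [
    {"first_name": "Ada", "last_name": "Lovelace", "middle_initial": None},
    {"first_name": "Alan", "last_name": "", "middle_initial": "M"},
    {"first_name": "Grace", "last_name": "Hopper", "middle_initial": "B"},
    {"first_name": None, "last_name": "Dijkstra", "middle_initial": "W"},
]

# Only first and last name are treated as essential; middle initial is optional.
pct = record_completeness(contacts, ["first_name", "last_name"])
print(pct)  # 50.0
```

Adding `middle_initial` to the required fields would drop the score to 25.0, which shows how strongly the result depends on which fields you declare essential.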

Data sampling

If you’re working with large data sets where it’s impractical to evaluate every attribute or record, you can systematically sample your data set to estimate completeness. Be sure to use random sampling to select representative subsets of your data.
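The sketch below shows one way to estimate completeness from a simple random sample, using Python's standard `random` module. The function name, the synthetic data, and the normal-approximation margin of error are assumptions chosen for illustration; a real data set may call for stratified sampling or a different interval method.

```python
import math
import random


def estimate_completeness(records, field, sample_size, seed=None):
    """Estimate the share of records with a populated `field` from a random sample.

    Returns (estimate, margin), where margin is an approximate 95% margin
    of error based on the normal approximation for a proportion.
    """
    if not records:
        raise ValueError("records must not be empty")
    rng = random.Random(seed)
    # Sample without replacement so no record is counted twice.
    sample = rng.sample(records, min(sample_size, len(records)))
    n = len(sample)
    present = sum(1 for r in sample if r.get(field) is not None)
    p = present / n
    margin = 1.96 * math.sqrt(p * (1 - p) / n)
    return p, margin


# Synthetic data: every fifth record lacks a phone number (true completeness 80%).
records = [
    {"customer_id": i, "phone": None if i % 5 == 0 else f"555-{i:06d}"}
    for i in range(100_000)
]

estimate, margin = estimate_completeness(records, "phone", sample_size=2_000, seed=42)
print(f"Estimated completeness: {estimate:.1%} (+/- {margin:.1%})")
```

Fixing the seed makes the estimate reproducible, which is useful when the same check runs on a schedule and results are compared over time.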

Data profiling

Data profiling uses a tool or programming language to surface metadata about your data. You can use profiling to analyze patterns, distributions, and missing value frequencies within your data sets.
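Dedicated profiling tools do far more than this, but the basic idea can be sketched in plain Python. The example below reports, for each column, how many values are missing and how many distinct values appear, and it counts which columns tend to be missing together. The `profile` function, the report layout, and the sample patient rows are hypothetical.

```python
from collections import Counter


def profile(records):
    """Build a small completeness profile for a list of dict records."""
    columns = sorted({key for r in records for key in r})
    missing = Counter()
    distinct = {c: set() for c in columns}
    patterns = Counter()  # which combination of columns is missing per row

    for r in records:
        # Assumption: None, empty strings, and absent keys count as missing.
        absent = tuple(c for c in columns if r.get(c) in (None, ""))
        patterns[absent] += 1
        for c in columns:
            if c in absent:
                missing[c] += 1
            else:
                distinct[c].add(r[c])

    total = len(records)
    return {
        "columns": {
            c: {
                "missing": missing[c],
                "missing_pct": 100.0 * missing[c] / total if total else 0.0,
                "distinct": len(distinct[c]),
            }
            for c in columns
        },
        "patterns": patterns,
    }


# Hypothetical EMR extract used only for illustration.
rows = [
    {"patient_id": 1, "blood_pressure": "120/80", "phone": "555-0100"},
    {"patient_id": 2, "blood_pressure": None, "phone": None},
    {"patient_id": 3, "blood_pressure": None, "phone": "555-0102"},
    {"patient_id": 4, "blood_pressure": "118/76"},  # phone key absent entirely
]

report = profile(rows)
for column, stats in report["columns"].items():
    print(column, stats)
print(report["patterns"].most_common())
```

The pattern counts are often the most useful part: if two fields are always missing together, the gap probably traces back to a single upstream cause.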

Common data completeness challenges

Incomplete data is a mystery by nature. Some piece of information should be present, and it’s not — so data teams need to find out what happened, why it happened, and how to make sure important data will be present and accounted for going forward.

Data can go missing for nearly endless reasons, but here are a few of the most common challenges around data completeness:

Inadequate data collection processes

Data collection and data ingestion can cause data completeness issues when collection procedures aren’t standardized or requirements aren’t clearly defined, leaving fields incomplete or missing.

Data entry errors or omissions

To err is human — so data collected manually is often incomplete. Data may be entered inconsistently, incorrectly, or not at all, leading to missing data points or values.

Incomplete data from external sources

When you ingest data from an external source, you lack a certain amount of control over how data is structured and made available. Different sources may structure data inconsistently, which can lead to missing values within your data sets. Data may be delayed or become unavailable altogether, leaving your data set unexpectedly incomplete when you expect it to be fresh and comprehensive.

Data integration challenges

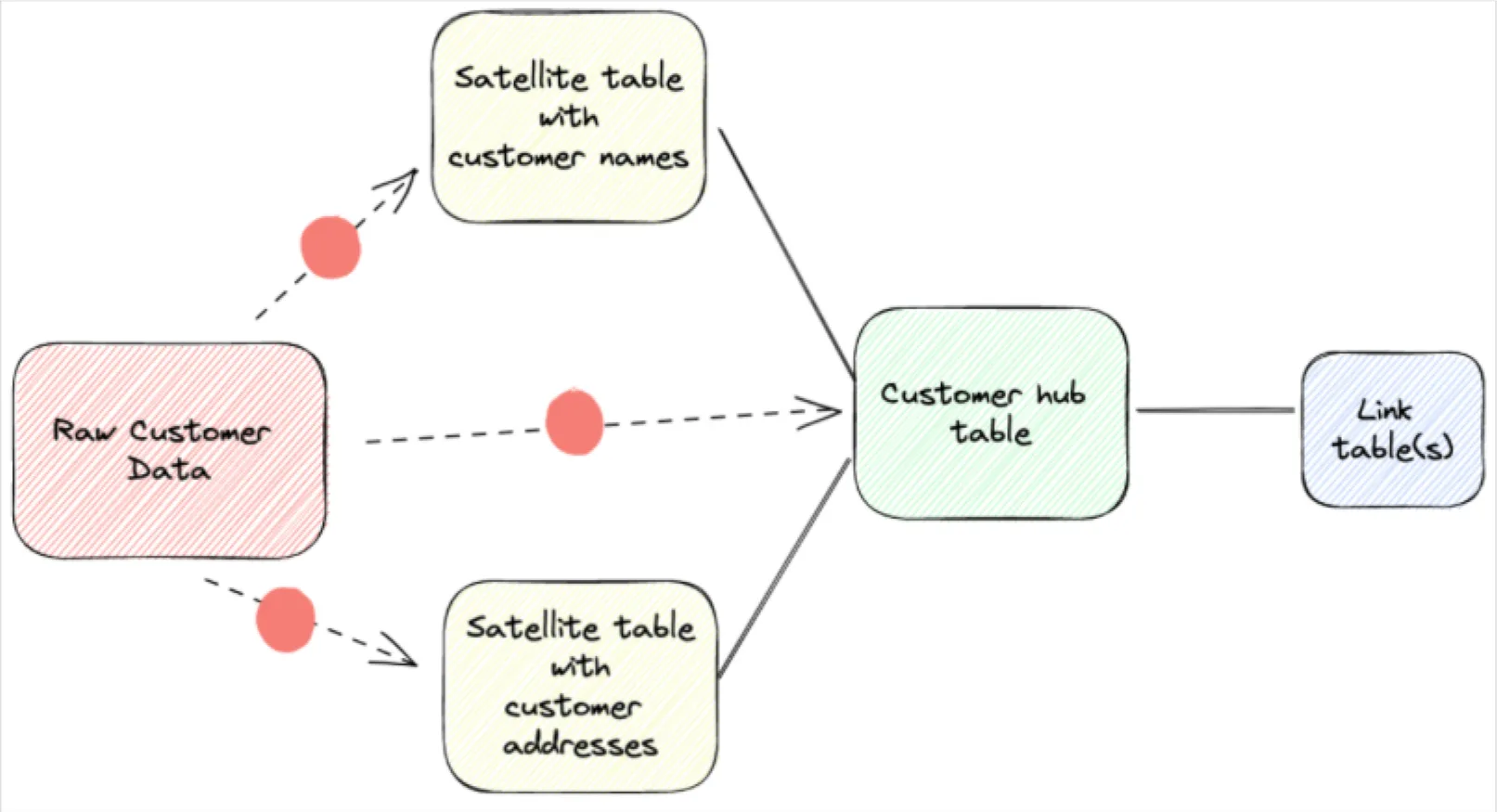

Merging data together from multiple sources, even within your own tech stack, can cause misaligned data mapping or incompatible structures. This can lead to data that’s incomplete in one system, even if it’s present in another.
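One way to catch this kind of gap is to reconcile the two systems on a shared key. The Python sketch below lists records that exist in a source system but not in the target, and fields that are populated in the source but empty in the target. The function name, the `customer_id` key, and both sample data sets are hypothetical.

```python
def find_integration_gaps(source, target, key, fields):
    """Compare two lists of dict records that share a key column.

    Returns (missing_records, missing_fields):
    - missing_records: keys present in source but absent from target
    - missing_fields: (key, field) pairs populated in source but empty in target
    """
    target_by_key = {row[key]: row for row in target}
    missing_records = []
    missing_fields = []
    for row in source:
        k = row[key]
        match = target_by_key.get(k)
        if match is None:
            missing_records.append(k)
            continue
        for f in fields:
            # Assumption: None and empty strings count as missing.
            if row.get(f) not in (None, "") and match.get(f) in (None, ""):
                missing_fields.append((k, f))
    return missing_records, missing_fields


# Hypothetical billing system (source) and warehouse table (target).
billing = [
    {"customer_id": "C1", "email": "a@example.com", "region": "EMEA"},
    {"customer_id": "C2", "email": "b@example.com", "region": "APAC"},
    {"customer_id": "C3", "email": "c@example.com", "region": "AMER"},
]
warehouse = [
    {"customer_id": "C1", "email": "a@example.com", "region": "EMEA"},
    {"customer_id": "C2", "email": "b@example.com", "region": None},
]

lost_records, lost_fields = find_integration_gaps(
    billing, warehouse, key="customer_id", fields=["email", "region"]
)
print(lost_records)  # ['C3']
print(lost_fields)   # [('C2', 'region')]
```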

Inefficient data validation and verification

Data tests and quality checks can provide some coverage, but they typically fail to detect incomplete data comprehensively at scale, especially without automated monitoring and thresholds based on historical patterns (more on this in a minute).

How to ensure data completeness across your stack

There are several approaches companies can take to ensure data completeness, from improving processes to implementing technology.

Data governance and standardization

Data governance can include well-documented data collection requirements and process standardization, which helps reduce instances of missing data at ingestion. Defining clear data ownership and responsibility also improves accountability for all dimensions of data quality, including completeness.

Regular data audits and validation processes

Systematic validation processes, like data quality testing and audits, can help data teams detect and identify incomplete data before downstream consumers do.

Automated data collection and validation tools

Tools that provide automated validation can help identify gaps and even fill in the blanks within incomplete data. For example, address verification tools can supply a missing ZIP code when a customer leaves it off a form.

User input and data entry validation techniques

Validation techniques, like requiring a certain form field to be completed or ensuring phone numbers don’t contain alphabetical characters, can help reduce instances of human error that commonly lead to incomplete data.
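Both checks mentioned above can be sketched in a few lines of Python. The function name, the default required fields, and the error messages are illustrative assumptions; production forms usually rely on a validation library and stricter phone number rules.

```python
def validate_contact_form(form, required_fields=("first_name", "last_name", "phone")):
    """Return a dict of field -> error message; an empty dict means the form passed."""
    errors = {}

    # Required-field check: reject absent, None, or blank values.
    for field in required_fields:
        value = form.get(field)
        if value is None or not str(value).strip():
            errors[field] = "This field is required."

    # Format check: phone numbers must not contain alphabetical characters.
    phone = form.get("phone")
    if phone and any(ch.isalpha() for ch in str(phone)):
        errors["phone"] = "Phone numbers cannot contain letters."

    return errors


ok = validate_contact_form(
    {"first_name": "Ada", "last_name": "Lovelace", "phone": "+1 (555) 010-0100"}
)
bad = validate_contact_form(
    {"first_name": "Ada", "last_name": "", "phone": "555-CALL"}
)
print(ok)   # {}
print(bad)  # {'last_name': 'This field is required.', 'phone': 'Phone numbers cannot contain letters.'}
```

Rejecting bad input at entry time is cheaper than repairing it later, because the person who knows the correct value is still present to supply it.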

The comprehensive way to ensure data completeness: data observability

Ultimately, processes like governance and manual testing will only go so far. As organizations ingest more data from more sources through increasingly complex pipelines, automation is required to ensure comprehensive coverage for data completeness.

Data observability provides key capabilities, such as automated monitoring and alerting, which leverage historical patterns in your data to detect incomplete data (like when five million rows turn into five thousand) and notify the appropriate team. Data owners can also set custom thresholds or rules to ensure all data is present and accounted for, even when introducing new sources or data sets.
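To make the idea of monitoring against historical patterns concrete, here is a deliberately simple Python sketch that flags a table load whose row count deviates sharply from recent history. It is a generic z-score heuristic with an optional custom minimum, not a description of how any particular observability platform works; the function name, thresholds, and row counts are assumptions.

```python
import statistics


def is_volume_anomaly(history, latest, z_threshold=3.0, min_rows=None):
    """Flag `latest` if it breaks a custom floor or deviates from history.

    history: recent row counts for the same table (at least two values)
    min_rows: optional custom threshold set by the data owner
    """
    # Custom rule: anything below the owner-defined floor is an anomaly.
    if min_rows is not None and latest < min_rows:
        return True

    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # Perfectly stable history: any change at all is unexpected.
        return latest != mean
    return abs(latest - mean) / stdev > z_threshold


# Hypothetical daily row counts for one table.
daily_rows = [
    5_010_000, 4_980_000, 5_020_000, 4_995_000,
    5_005_000, 4_990_000, 5_000_000,
]

print(is_volume_anomaly(daily_rows, 5_003_000))  # False: within normal variation
print(is_volume_anomaly(daily_rows, 5_000))      # True: five million became five thousand
```

A fixed z-score ignores seasonality and growth trends, so real monitors typically use models that account for day-of-week patterns and gradual change.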

Additionally, automated data lineage and other root cause analysis tooling span your entire data environment, from ingestion to BI tooling. This helps your data team quickly identify what broke, where, and who needs to be notified that their data is missing. Observability tooling can also surface related context, such as SQL query history for missing tables, relevant GitHub pull requests, and dbt models that may be impacted by incomplete data.

Ultimately, data observability helps ensure the data team, not the business users or data consumers, is the first to know when data is incomplete. This allows for a proactive response, minimizes the impact of incomplete data, and prevents a loss of trust in data across the organization.

Ready to learn how data observability can ensure data completeness — and improve overall data quality — at your organization? Contact our team to schedule a personalized demo of the Monte Carlo data observability platform.

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

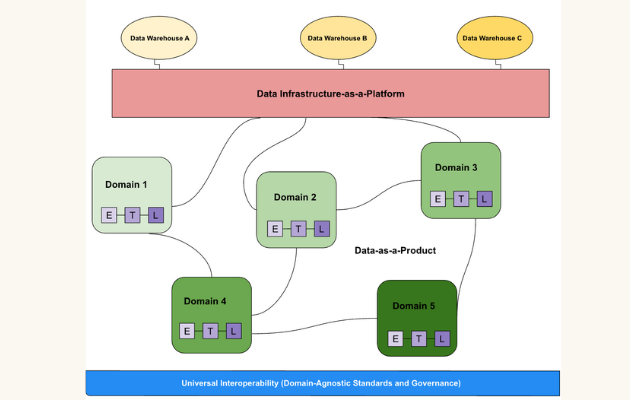

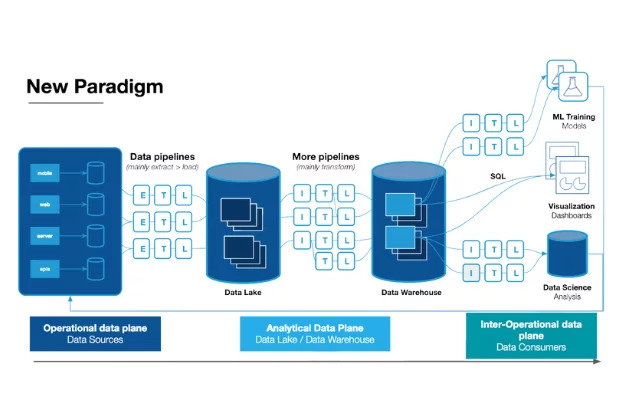

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage